I had intended on talking about this but got caught on other issues. Thankfully, Andrew mentioned it on his blog and then everybody with a name provided several illuminating references. His blog entry is entitled An AI can build and try out statistical models using an open-ended generative grammar. You ought to read it as well as the attendant comments. Go ahead, I'll wait.

The different works mentioned in that blog entry are listed at the bottom of this entry [1,2,3] and were written by folks like Roger Grosse, Ruslan Salakhutdinov, Bill Freeman, Josh Tenenbaum, David Duvenaud, James Robert Lloyd, Zoubin Ghahramani, Gordon Wilson and Ryan Prescott Adams.

One of the reasons for the existence of the Matrix Factorization Jungle page revolves around the quite recent explosion of advanced factorizations enabled by new algorithms such as NMF, Robust PCA and so on, While the list is far from complete, there is a sense that it is just expanding and that it is indeed becoming quite a jungle out there: which one to choose for what problem is a recurring thought. The point of [1,2,3] is that, maybe, your data can be decomposed with a grammar like structure. What we usually study in datasets is whether or not they fit in a Robust PCA or NMF approach but one is seldom pushing the concept further. What [1,2,3] do is investigate the composition of several models to see how they fit different datasets. In particular, [3] talks about performing some sort of automated recursive matrix factorization. The key word here is automated as is one explore many several composition of these factorization and see which one provides a good explanatory framework.

from [3]

This is simply fascinating. Let's take for example Robust PCA. a factorization that is usually used for background subtraction work: what if after the decomposition of a data matrix M through robust PCA, e.g.

M = B + S + N,

where B is the background, S the sparse component and N a noise matrix,

one were to decompose further S through NMF e.g.

S = W F

where both W and F are positive matrices.

and then what if were to decompose further W into some dictionary learning decomposition, and so on.....

{3] presents a way to do this in some automated ways by producing all the scenarios possible and then have a mechanism to evaluate which one of these models is most appropriate.

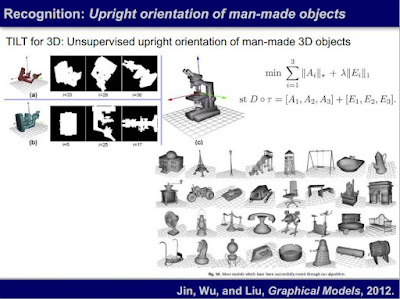

It will not have escaped the faithful reader of Nuit Blanche that Yi Ma and co-authors (see [4]) are building these models from scratch with an approach that is grounded in theoretical justification for the use of these transforms.

In effect, we are seeing two worlds colliding: on the one end, an approach that is purely data driven which may yield some unexpected corner of knowledge [1-3], on the other (and because the image data is probably more understood) an approach [4] focused on devising the best solvers and attendant admissibility conditions for a known end product.

While these approaches may look opposite, they are not. See, for a very large majority (if not all) solvers devised in the Matrix Factorization Jungle [5], they are actually dependent on a series of parameters such as sparsity level, rank number, number of iterations, termination criteria, etc....) that are simply equivalent to the parameters used in [1-3]. While there are few, they still exist. How could we expect the recursive approach of [3] to fit vision studies i.e. the type of work that Yi Ma et al usually investigate, from [3]:

For an example from vision, consider a matrix X, where each row is a small (e.g. 12 12) patch sampled from an image and vectorized. Image patches can be viewed as lying near a low-dimensional subspace spanned by the lowest frequency Fourier coefficients (Bossomaier and Snyder, 1986). This can be captured by the low-rank model GG + G. In a landmark paper, Olshausen and Field (1996) found that image patches are better modeled as a linear combination of a small number of components drawn from a larger dictionary. In other words, X is approximated as the product W A, where each row of A is a basis function, and W is a sparse matrix giving the linear reconstruction coefficients for each patch. By fitting this “sparse coding” model, they obtained a dictionary of oriented edges similar to the receptive fields of neurons in the primary visual cortex. If we apply Rule (5), we obtain a Bayesian version of sparse coding, (exp(G) G)G + G, similar to the model proposed by Berkes et al. (2008). Intuitively, the latent Gaussian coefficients are multiplied elementwise by “scale” variables to give a heavy-tailed distribution. Many researchers have designed models to capture the dependencies between these scale variables, and such “Gaussian scale mixture” models represent the state-of-the art for lowlevel vision tasks such as denoising (Portilla et al., 2003) and texture synthesis (Portilla and Simoncelli, 2000). One such GSM model is that of Karklin and Lewicki (2008), who fit a low-rank model to the scale variables. By applying Rule (1) to the sparse coding structure, we can represent their model in our framework as (exp(GG+G) G)G+G. This model has been successful at capturing higher-level textural properties of a scene and has properties similar to complex cells in the primary visual cortex.

In short, the main difference between the approach in [3] and that in the Matrix Factorization that we generally read on Nuit Blanche revolve around how much faster the solvers found in the Matrix Factorization Jungle page are. And then there is this issue of model selection after all the computations are performed, What sort of criteria do you use ?

I note from [3]

While elegant solutions exist for particular models, estimating the data marginal likelihood generically is still extremely difficult. At the other extreme, one can hold out a subset of the entries of the matrix and compute the mean squared error for predicting these entries. MSE is easier to implement, but we found that it was unable to distinguish many of the the more complex structures in our grammar.

I wonder if the MSE would be able to distinguish these modesl better if one were to use the solvers from the Matrix Factorization Jungle page. Which leads us to the following fleeting thought: How about picking solvers from the Jungle page and build a recursive grammar out of them ? would the exploration of the world through that grammar be competitive with the tools developed in [1-3] (and for which no implementation is currently available) ?

[1] Gaussian Process Covariance Kernels for Pattern Discovery and Extrapolation by Andrew Gordon Wilson, Ryan Prescott Adams

Gaussian processes are rich distributions over functions, which provide a Bayesian nonparametric approach to smoothing and interpolation. We introduce simple closed form kernels that can be used with Gaussian processes to discover patterns and enable extrapolation. These kernels are derived by modelling a spectral density -- the Fourier transform of a kernel -- with a Gaussian mixture. The proposed kernels support a broad class of stationary covariances, but Gaussian process inference remains simple and analytic. We demonstrate the proposed kernels by discovering patterns and performing long range extrapolation on synthetic examples, as well as atmospheric CO2 trends and airline passenger data. We also show that we can reconstruct standard covariances within our framework.

[2] Structure Discovery in Nonparametric Regression through Compositional Kernel Search by David Duvenaud, James Robert Lloyd, Roger B. Grosse, Joshua B. Tenenbaum, Zoubin Ghahramani

Despite its importance, choosing the structural form of the kernel in nonparametric regression remains a black art. We define a space of kernel structures which are built compositionally by adding and multiplying a small number of base kernels. We present a method for searching over this space of structures which mirrors the scientific discovery process. The learned structures can often decompose functions into interpretable components and enable long-range extrapolation on time-series datasets. Our structure search method outperforms many widely used kernels and kernel combination methods on a variety of prediction tasks.

[4] Sparse and Low-Dimensional Representation, Lecture 3: Modeling High-dimensional (Visual) Data, Yi MaThe recent proliferation of richly structured probabilistic models raises the question of how to automatically determine an appropriate model for a dataset. We investigate this question for a space of matrix decomposition models which can express a variety of widely used models from unsupervised learning. To enable model selection, we organize these models into a context-free grammar which generates a wide variety of structures through the compositional application of a few simple rules. We use our grammar to generically and efficiently infer latent components and estimate predictive likelihood for nearly 2500 structures using a small toolbox of reusable algorithms. Using a greedy search over our grammar, we automatically choose the decomposition structure from raw data by evaluating only a small fraction of all models. The proposedmethod typically finds the correct structure for synthetic data and backs off gracefully to simpler models under heavy noise. It learns sensible structures for datasets as diverse as image patches, motion capture, 20 Questions, and U.S. Senate votes, all using exactly the same code.

[5] The Matrix Factorization Jungle

1 comment:

I've seen similar, but much simpler, techniques based on context free grammars applied with a little success. It's so great to see a major advance in this area.

Post a Comment