Analysis Operator Learning and Its Application to Image Reconstruction by Simon Hawe, Martin Kleinsteuber, Klaus Diepold. The abstract reads:

Exploiting a priori known structural information lies at the core of many image reconstruction methods that can be stated as inverse problems. The synthesis model, which assumes that images can be decomposed into a linear combination of very few atoms of some dictionary, is now a well established tool for the design of image reconstruction algorithms. An interesting alternative is the analysis model, where the signal is multiplied by an analysis operator and the outcome is assumed to be sparse. This approach has only recently gained increasing interest. The quality of reconstruction methods based on an analysis model severely depends on the right choice of the suitable operator. In this work, we present an algorithm for learning an analysis operator from training images. Our method is based on an $\ell_p$-norm minimization on the set of full rank matrices with normalized columns. We carefully introduce the employed conjugate gradient method on manifolds, and explain the underlying geometry of the constraints. Moreover, we compare our approach to state-of-the-art methods for image denoising, inpainting, and single image super-resolution. Our numerical results show competitive performance of our general approach in all presented applications compared to the specialized state-of-the-art techniques.
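
To make the analysis model in the abstract concrete, here is a minimal sketch in plain Python. It is not the authors' learned operator or their cost function: the operator is a hand-built 1-D first-difference matrix (a stand-in for TV), the test signals are made up, and `lp_penalty` is a plain unsmoothed sum of $|a_i|^p$ chosen only for illustration. All function names are hypothetical.

```python
def first_difference_operator(n):
    """Build an (n-1) x n matrix whose rows are [-1, 1] stencils (1-D TV-like)."""
    rows = []
    for i in range(n - 1):
        row = [0.0] * n
        row[i], row[i + 1] = -1.0, 1.0
        rows.append(row)
    return rows


def apply_operator(omega, x):
    """Compute the analysis coefficients omega @ x with plain lists."""
    return [sum(w * v for w, v in zip(row, x)) for row in omega]


def count_nonzeros(coeffs, tol=1e-9):
    """Number of coefficients that are not (numerically) zero."""
    return sum(1 for a in coeffs if abs(a) > tol)


def lp_penalty(coeffs, p=0.5):
    """Illustrative sparsity measure: sum of |a_i|^p (assumed form, 0 < p <= 1)."""
    return sum(abs(a) ** p for a in coeffs)


# Hypothetical test signals: one piecewise constant, one steadily increasing.
piecewise_constant = [2.0] * 4 + [5.0] * 4 + [1.0] * 4
ramp = [float(i) for i in range(12)]

omega = first_difference_operator(12)
coeffs_pc = apply_operator(omega, piecewise_constant)
coeffs_ramp = apply_operator(omega, ramp)

print(count_nonzeros(coeffs_pc), count_nonzeros(coeffs_ramp))
print(lp_penalty(coeffs_pc), lp_penalty(coeffs_ramp))
```

The piecewise-constant signal produces only a couple of nonzero coefficients under this operator, while the ramp produces a nonzero at every position. An operator that is well matched to a signal class gives a small penalty on signals from that class, which is the property a learning procedure tries to obtain from training data.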

Simon also showed me what the Omega operators looked like after the learning process as I wanted to know how far they were from a simple derivative-TV operator. Here is what he had to say:

....On Page 12 Figure 3 you see the atoms of the operator we have learned within all our experiments [see above]. They look more like higher order derivatives than first order derivatives as in the TV case. We played around a little bit with the optimization, and if we introduce a prior which enforces the atoms themselves to be sparse, then the output does highly look like TV, see the attached image. [see below]
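
As a rough illustration of why higher-order derivative atoms can behave differently from first-order (TV-like) ones, the sketch below compares hand-built 1-D difference operators on a linear ramp. The stencils `[-1, 1]` and `[1, -2, 1]` and the helper names are assumptions for illustration only; they are not the learned atoms from the paper.

```python
def difference_operator(n, stencil):
    """Build a matrix whose rows slide the given stencil along a length-n signal."""
    k = len(stencil)
    rows = []
    for i in range(n - k + 1):
        row = [0.0] * n
        row[i:i + k] = stencil
        rows.append(row)
    return rows


def apply_operator(omega, x):
    """Compute omega @ x with plain lists."""
    return [sum(w * v for w, v in zip(row, x)) for row in omega]


def count_nonzeros(coeffs, tol=1e-9):
    """Number of coefficients that are not (numerically) zero."""
    return sum(1 for a in coeffs if abs(a) > tol)


# Hypothetical smooth signal: a linear ramp, like a gentle intensity gradient.
ramp = [0.5 * i for i in range(10)]

first_order = difference_operator(10, [-1.0, 1.0])        # TV-like stencil
second_order = difference_operator(10, [1.0, -2.0, 1.0])  # second-derivative stencil

nnz_first = count_nonzeros(apply_operator(first_order, ramp))
nnz_second = count_nonzeros(apply_operator(second_order, ramp))
print(nnz_first, nnz_second)
```

The first-order operator responds at every sample of the ramp, whereas the second-order operator returns all zeros. Smooth gradients are therefore sparse under higher-order differences but not under a plain first-difference operator.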

After the Noise Aware Analysis Operator Learning for Approximately Cosparse Signals featured earlier this week, the collection of approaches to learning these analysis operators is growing. Simon tells me their implementation should be out soon. A new era, where the fields of signal processing and mathematical physics are colliding, is born.

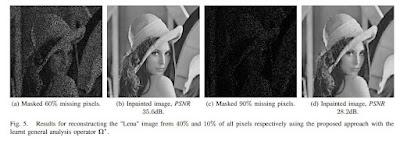

and yes, 90 percent missing pixels!

Thanks Simon

3 comments:

How about applying this to intrinsically noisy images, such as nuclear medicine images?

Absolutely!

Igor.

I'd like to see the 10% resolution image corresponding to the 90% missing pixels image. A simple "nearest neighbour" resampling to remove the black areas would be the easy solution to this problem. So I wonder how "repainting" compares.
