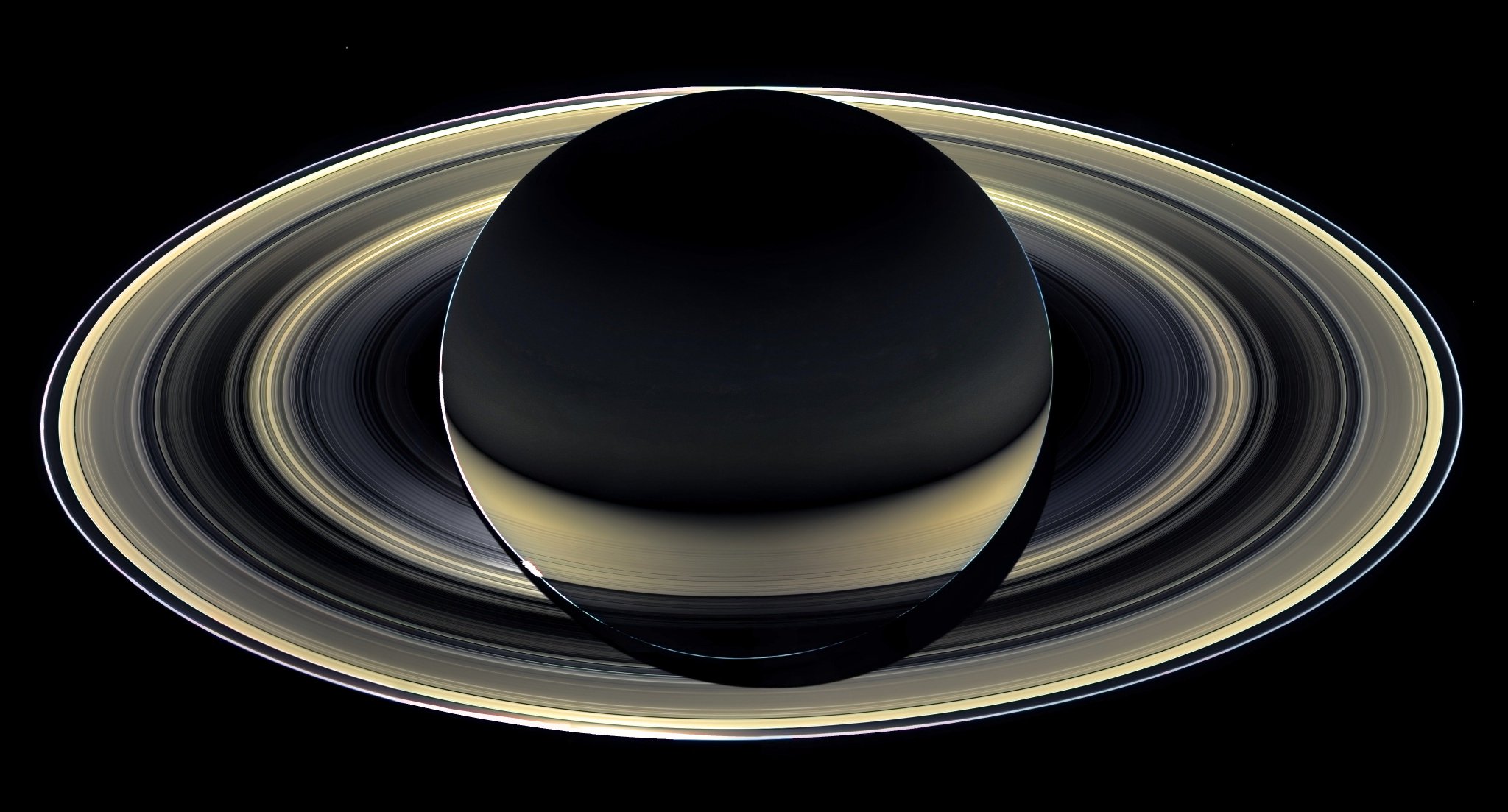

Image Credit: NASA/JPL-Caltech/Space Science Institute, Ian Regan

Nonlinear regression models are widely used in statistics and machine learning because they can be more accurate than linear models. In this work, we present a novel modeling framework that is computationally efficient for high-dimensional datasets and predicts more accurately than most classic state-of-the-art predictive models. We couple a nonlinear random Fourier feature data transformation with an intrinsically fast learning algorithm, Vowpal Wabbit (VW). The key idea is that introducing nonlinear structure into an otherwise linear framework lets us account for all possible higher-order interactions between entries in a string. We examine the utility of our nonlinear VW extension in some detail on an important problem in statistical genetics: genomic selection, i.e., the prediction of phenotype from genotype. We illustrate the benefits of our method, and its robustness to the underlying genetic architecture, on a real dataset of 129 quantitative traits in heterogeneous stock mice from the Wellcome Trust Centre for Human Genetics.
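The pairing of a random Fourier feature transform with a fast linear learner can be sketched in miniature. Below is a minimal pure-Python sketch, assuming a Gaussian (RBF) kernel for the random Fourier features (the standard Rahimi and Recht construction; the post does not say which kernel is used) and a plain online SGD learner on squared loss standing in for VW, which it is not. The names `make_rff`, `train_sgd` and `mse`, and the toy target y = x1·x2, are hypothetical and chosen only to show how a linear learner on transformed features can capture an interaction it cannot represent on raw inputs.

```python
import math
import random


def make_rff(input_dim, num_features, sigma, seed=0):
    """Sample a random Fourier feature map z(.) such that z(x) . z(y)
    approximates the Gaussian kernel exp(-||x - y||^2 / (2 * sigma^2))."""
    rng = random.Random(seed)
    # Frequencies w_j ~ N(0, I / sigma^2): the spectral density of this kernel.
    omegas = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(input_dim)]
              for _ in range(num_features)]
    # Phases b_j ~ Uniform[0, 2*pi).
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    scale = math.sqrt(2.0 / num_features)

    def transform(x):
        # z_j(x) = sqrt(2 / D) * cos(w_j . x + b_j)
        return [scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + b)
                for w, b in zip(omegas, phases)]

    return transform


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def train_sgd(features, targets, epochs=200, lr=0.1, seed=0):
    """Online SGD on squared loss for a linear model with a bias term.
    Hypothetical stand-in for a fast linear learner; this is not VW."""
    rng = random.Random(seed)
    weights = [0.0] * len(features[0])
    bias = 0.0
    order = list(range(len(features)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            z = features[i]
            err = dot(weights, z) + bias - targets[i]
            # Gradient step on 0.5 * err^2 for one example at a time.
            for j, zj in enumerate(z):
                weights[j] -= lr * err * zj
            bias -= lr * err
    return lambda z: dot(weights, z) + bias


def mse(predict, features, targets):
    """Mean squared error of a predictor over a dataset."""
    errors = [(predict(z) - t) ** 2 for z, t in zip(features, targets)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Toy target y = x1 * x2: a pure pairwise interaction that no linear
    # function of (x1, x2) can capture on this symmetric grid.
    grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
    xs = [[a, b] for a in grid for b in grid]
    ys = [a * b for a, b in xs]

    linear = train_sgd(xs, ys)
    rff = make_rff(input_dim=2, num_features=200, sigma=0.5)
    zs = [rff(x) for x in xs]
    nonlinear = train_sgd(zs, ys)

    print("MSE, linear model on raw inputs:", round(mse(linear, xs, ys), 4))
    print("MSE, linear model on RFF inputs:", round(mse(nonlinear, zs, ys), 4))
```

On raw inputs the linear model cannot go below 0.25 (the target's variance on this grid), while the same learner on the transformed features should fit the interaction much more closely. In a real pipeline, one plausible route is to write each transformed row in VW's plain-text input format (`label | name:value ...`) and train VW itself; the feature count, bandwidth `sigma` and learning rate above are illustrative and are not values from the post.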
