- most interesting document collections are always larger than the latest, largest context window the cool kids talk about

- the reason we want a satisfying RAG pipeline is that we do not want to choose by hand which documents go into the context window

- the current story is about text; get ready for images, voice and video

- large context windows do not guarantee good recall

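Late-interaction models take a different approach: instead of compressing a document into a single vector, they keep one embedding per token and score a query against a document with the MaxSim operator, matching each query token to its most similar document token.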

This simple but powerful insight has sparked an open-source ecosystem that’s now shaping both academic research and production-scale AI systems.
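A minimal, dependency-free sketch of late-interaction scoring: each query token embedding is matched to its most similar document token embedding (MaxSim), and these maxima are summed. The toy 2-D vectors and the helper names `dot` and `maxsim` are made up for illustration; real ColBERT-style models produce much higher-dimensional, L2-normalized embeddings (so the dot product acts as a cosine similarity), and production code would use batched tensor operations rather than Python loops.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_tokens: List[Vector], doc_tokens: List[Vector]) -> float:
    """Late-interaction (MaxSim) score: for every query token, keep only its
    best-matching document token, then sum those per-token maxima."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)


# Toy token embeddings (hypothetical, for illustration only).
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # matches both query tokens
doc_b = [[1.0, 0.0], [0.9, 0.1]]              # matches only the first one

print(maxsim(query, doc_a))  # 2.0
print(maxsim(query, doc_b))  # 1.1
```

Because each query token picks its own best match, a document only scores well if it covers every part of the query, rather than being close to the query "on average".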

PyLate: From Experimental Code to Peer-Reviewed Paper

PyLate began as an internal experiment to simplify multi-vector training. Today, it’s a full-fledged library with 527 GitHub stars and growing adoption.

👉 Learn more about the library: PyLate documentation

ModernBERT: Re-Imagining the Encoder

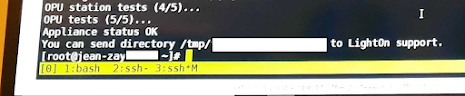

In partnership with Answer.AI, LightOn co-developed ModernBERT, a model that fundamentally rethinks encoder architecture.

ModernBERT has already been cited 305+ times, with variants like BioClinical ModernBERT emerging for healthcare applications.

👉 Explore: ModernBERT LightOn blog post

FastPlaid: Performance That Scales

Building great models is only half the challenge; making them work in production is the other half. That’s where FastPlaid comes in.

As Raphael Sourty explains, static indexes solve many use cases, but mutable indexes (new in v1.10.0) unlock real-world applications where data evolves continuously.
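To make the static-versus-mutable distinction concrete, here is a toy sketch of a multi-vector index that accepts additions and deletions after it has been created and answers queries by brute-force MaxSim. `ToyMutableIndex` and its `add`, `delete` and `search` methods are hypothetical names invented for this illustration; this is not FastPlaid's API, and it skips the compression and candidate-generation stages that PLAID-style engines rely on to stay fast at scale.

```python
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def maxsim(query_tokens: List[Vector], doc_tokens: List[Vector]) -> float:
    """Sum over query tokens of the best dot product with any document token."""
    return sum(
        max(sum(x * y for x, y in zip(q, d)) for d in doc_tokens)
        for q in query_tokens
    )


class ToyMutableIndex:
    """Hypothetical brute-force multi-vector index that supports updates.
    NOT FastPlaid's API and NOT the PLAID search algorithm."""

    def __init__(self) -> None:
        # doc_id -> list of token embeddings
        self._docs: Dict[str, List[Vector]] = {}

    def add(self, doc_id: str, token_embeddings: List[Vector]) -> None:
        # Insert (or overwrite) a document without rebuilding anything.
        self._docs[doc_id] = token_embeddings

    def delete(self, doc_id: str) -> None:
        # Remove a document if present; silently ignore unknown ids.
        self._docs.pop(doc_id, None)

    def search(self, query_tokens: List[Vector], k: int = 10) -> List[Tuple[str, float]]:
        # Score every stored document, then return the top-k by MaxSim.
        scored = [(doc_id, maxsim(query_tokens, toks)) for doc_id, toks in self._docs.items()]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


index = ToyMutableIndex()
index.add("old", [[1.0, 0.0]])
print(index.search([[0.0, 1.0]], k=1))  # [('old', 0.0)]

index.add("new", [[0.0, 1.0]])          # the collection evolves after creation
print(index.search([[0.0, 1.0]], k=1))  # [('new', 1.0)]

index.delete("new")
```

The trade-off in this toy is deliberate: updates are cheap dictionary operations, while every search scans the whole collection, which is precisely the cost a real mutable index has to avoid.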

👉 Read more: FastPlaid LightOn blogpost

PyLate-rs: Retrieval in the Browser

Finally, to push accessibility even further, PyLate-rs compiles late-interaction inference to WebAssembly (WASM).

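That means late-interaction models can encode queries and documents client-side, directly in the browser, without a dedicated inference server.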

This lowers the barrier for demos, education, and lightweight deployments, showing that late interaction is not just powerful but also portable.

From Theory to Production: A Movement

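Taken together, these projects form a coherent stack: PyLate for training, ModernBERT as the encoder backbone, FastPlaid for indexing at scale, and PyLate-rs for in-browser inference.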

The ecosystem has grown from an academic curiosity into a reasoning-first retrieval stack. With recognition at CIKM and ACL, adoption across GitHub and HuggingFace, and practical tools for real-world workflows, LightOn is helping shape the next era of AI search.

📖 Explore LightOn’s open-source ecosystem:

Neural ranking has become a cornerstone of modern information retrieval. While single-vector search remains the dominant paradigm, it has the shortcoming of compressing all the information into a single vector. This compression leads to notable performance degradation in out-of-domain, long-context, and reasoning-intensive retrieval tasks. Multi-vector approaches pioneered by ColBERT aim to address these limitations by preserving individual token embeddings and computing similarity via the MaxSim operator. This architecture has demonstrated clear empirical advantages, including better out-of-domain generalization, stronger long-context handling, and higher performance in complex retrieval scenarios. Despite these compelling empirical results and clear theoretical advantages, the practical adoption and public availability of late interaction models remain low compared to their single-vector counterparts, primarily due to a lack of accessible and modular tools for training and experimenting with such models. To bridge this gap, we introduce PyLate, a streamlined library built on top of Sentence Transformers to support multi-vector architectures natively, inheriting its efficient training, advanced logging, and automated model card generation while requiring only minimal changes to the code templates users are already familiar with. By offering multi-vector-specific features such as efficient indexes, PyLate aims to accelerate research and real-world application of late interaction models, thereby unlocking their full potential in modern IR systems. Finally, PyLate has already enabled the development of state-of-the-art models, including GTE-ModernColBERT and Reason-ModernColBERT, demonstrating its practical utility for both research and production environments.
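The abstract's point that squeezing everything into a single vector degrades retrieval can be illustrated with a toy example. The sketch below assumes unnormalized mean pooling as the single-vector baseline (real single-vector models may use a CLS token or normalized pooling) and uses made-up 3-D token embeddings: a long document with many off-topic tokens and exactly one token that matches the query.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def mean_pool(tokens: List[Vector]) -> List[float]:
    """Collapse token embeddings into one vector (assumed single-vector baseline)."""
    n = len(tokens)
    return [sum(col) / n for col in zip(*tokens)]


def single_vector_score(query_tokens: List[Vector], doc_tokens: List[Vector]) -> float:
    return dot(mean_pool(query_tokens), mean_pool(doc_tokens))


def maxsim(query_tokens: List[Vector], doc_tokens: List[Vector]) -> float:
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)


query = [[0.0, 0.0, 1.0]]  # one very specific query token
# Long document: 8 off-topic tokens plus a single exact match (toy data).
doc = [[1.0, 0.0, 0.0]] * 4 + [[0.0, 1.0, 0.0]] * 4 + [[0.0, 0.0, 1.0]]

print(single_vector_score(query, doc))  # about 0.111 (1/9): the match is diluted
print(maxsim(query, doc))               # 1.0: the matching token survives
```

The longer the document, the more the pooled vector drifts toward its dominant topics, whereas MaxSim still sees the single relevant token; this is one intuition behind the long-context and out-of-domain gains the abstract mentions.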

Follow @NuitBlog or join the CompressiveSensing Reddit, the Facebook page, the Compressive Sensing group on LinkedIn or the Advanced Matrix Factorization group on LinkedIn

Other links:

Paris Machine Learning: Meetup.com||@Archives||LinkedIn||Facebook|| @ParisMLGroup About LightOn: Newsletter ||@LightOnIO|| on LinkedIn || on CrunchBase || our Blog

About myself: LightOn || Google Scholar || LinkedIn ||@IgorCarron ||Homepage||ArXiv
