From the

Rice Compressive Sensing resource site, here is a new paper:

Compressive Imaging of Color Images by Pradeep Nagesh and

Baoxin Li. The abstract reads:

In this paper, we propose a novel compressive imaging framework for color images. We first introduce an imaging architecture based on combining the existing single-pixel Compressive Sensing (CS) camera with a Bayer color filter, thereby enabling acquisition of compressive color measurements. Then we propose a novel CS reconstruction algorithm that employs joint sparsity models in simultaneously recovering the R, G, B channels from the compressive measurements. Experiments simulating the imaging and reconstruction procedures demonstrate the feasibility of the proposed idea and the superior quality in reconstruction.

It looks like the RGB encoding could also

be useful for smell encoding as well. When are we going to see a compressive sensing nose ? The bayer pattern reminds me of a

previous post on retinal cells.

On Friday, March 13, 2009,

Hongkai Zhao will give a talk at Stanford on "

A fast solver for radiative transport equation and applications in optical imaging". The Abstract reads:

I will present an efficient forward solver for steady-state or frequency-domain radiative transfer equation (RTE) on 2D and 3D structured and unstructured meshes with vacuum boundary condition or reflection boundary condition. Due to high dimensionality the key issue is to construct an efficient iterative scheme that can deal with various behaviors of the solution in different regimes, such as transport, diffusion, optical thick, and forward peaking . In our algorithm we use a direct angular discretization and upwind type of spatial discretization that preserves properties of both scattering and differential operators of RTE. To solve the large linear system after discretization, we construct an efficient iterative scheme based on Gauss-Seidel and proper angular dependent ordering. With this iterative scheme as the relaxation we implement various multigrid methods in both angular and physical space. Our algorithm can deal with different scattering regimes efficiently. Efficiency and accuracy of our algorithm is demonstrated by comparison with both analytical solutions and Monte Carlo solutions, and various numerical tests in optical imaging. Based on this algorithm, a multi-level imaging algorithm is developed for optical tomorgraphy. If time permits, I will also talk about recent work on compressive sensing (CS) in bioluminescence tomography (BLT) based on RTE. We show that direct use of Green's function of RTE as the basis and standard measurement data for reconstruction will not satisfy the restricted isometry property (RIP). We propose an algorithm to transform the standard setup into a new system that satisfies the RIP. Hence, with compressive sensing method, we produce much improved results over standard reconstruction method.

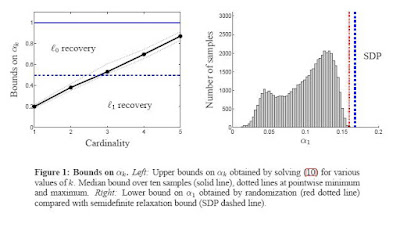

Both programs check a condition on a constant \alpha_k. The study of the value of \alpha_k with small matrices could allow one to investigate low order models as well as specific dictionaries.... These two programs are prominently listed in the

measurement matrix section of the Big Picture.

, there will be a talk on Compressive Sampling by

Brian Robinson, Center for Applied Optics, UA Huntsville, February 27, 2009, 219 Shelby Center, 3:00 (Refreshements at 2:30). The abstract of his talk is:

Conventional sampling is ruled by the Whittaker-Shannon Theorem, which states that, in order to faithfully recover a signal from a set of discrete samples, the samples must be taken with a frequency (the Nyquist frequency) which is twice the highest frequency present in the original signal. Under this regime, the sampling function is the classical comb function, consisting of a train of delta functions equally spaced with a period that is the reciprocal of twice the highest frequency in the signal. This principle underlies nearly all signal sampling protocols used in contemporary electronic devices. As it turns out, however, this method of sampling is not optimal. In other words, there are other methods that require far fewer samples than sampling with the canonical "spike" basis of W-S theory. Compressed Sensing (CS) is a novel sensing/sampling paradigm that goes against the common wisdom in data acquisition in that it demonstrates the feasibility of reconstructing data sets to a high degree of fidelity (and even exactly), with far fewer samples than is required by the W-S sampling paradigm. It is important to note that the methods are also robust to noise. CS relies on two related phenomena to make this possible: sparseness and incoherence. The properties of CS were largely discovered by applied mathematicians working in the field of probability theory and it has profound implications for practical systems such as optical imaging systems. In this talk we will illuminate the ideas of CS theory, discuss the roles of sparseness and incoherence, talk about the relationship that randomness plays in constructing a suitable set of sensing waveforms, and talk about the practical applications of this theory to modern sensing systems.

I got a request recently and the reason I am posting it is because I believe that many would be beginners have exactly the same question:

I have problems on how to calculate the coherence of CS, especially on sampling partial fouier coefficients and renconstruct the image in wavelet domain. This problem is specific for sparse MRI.

argmin ||x|| s.t. y=phi*F*psi*x

This equation is for noise-free MRI.

psi is the sparsifying transform,e.g.wavelet, F is the fourier operator, phi is the random sampling mask in MRI. Often, we acquire the raw data in MRI scanner according to phi.*mask.

For a normal MRI image with size 512*512,

My problems are:

1. Dictionary of visible basis of 2D wavelet or 2D fourier are very large, since each basis is equal to the size of image 512*512, which is 262144 as a long column, I do not think it is possible to construct the wavelet basis W and fourier basis F directly. Do you have any comments on this ? I am considering the @operator in matlab.

2. For sparse MRI, A=phi*F*psi, am I right ?

I am puzzled on the questions for a lot time and read related literatures many times.

Could you provide me some help on this? Did you collect the code for caculating the coherence for large data.

To what I responded:

From arxiv,

John Langford has followed on his thoughts on error correcting codes (see

his first comment when I guest blogged on his blog). The paper is

Multi-Label Prediction via Compressed Sensing by

Daniel Hsu,

Sham Kakade,

John Langford,

Tong Zhang. The abstract reads:

We consider multi-label prediction problems with large output spaces under the assumption of output sparsity -- that the target vectors have small support. We develop a general theory for a variant of the popular ECOC (error correcting output code) scheme, based on ideas from compressed sensing for exploiting this sparsity. The method can be regarded as a simple reduction from multi-label regression problems to binary regression problems. It is shown that the number of subproblems need only be logarithmic in the total number of label values, making this approach radically more efficient than others. We also state and prove performance guarantees for this method, and test it empirically.

In a different direction, not specifically related to CS but hinting on ways to do a better job of calibrating a Random lens Imager here is a paper by

Kyle Herrity,

Raviv Raich and

Alfred Hero, entitled

Blind reconstruction of sparse images with unknown point spread function. The abstract reads:

We consider the image reconstruction problem when the original image is assumed to be sparse and when partial knowledge of the point spread function (PSF) is available. In particular, we are interested in recovering the magnetization density given magnetic resonance force microscopy (MRFM) data, and we present an iterative alternating minimization algorithm (AM) to solve this problem. A smoothing penalty is introduced on allowable PSFs to improve the reconstruction. Simulations demonstrate its performance in reconstructing both the image and unknown point spread function. In addition, we develop an optimization transfer approach to solving a total variation (TV) blind deconvolution algorithm presented in a paper by Chan and Wong. We compare the performance of the AM algorithm to the blind TV algorithm as well as to a TV based majorization-minimization algorithm developed by Figueiredo et al.

We don't

many non-linear results but this is one:

Sparse Regularization with lq Penalty Term by

Markus Grasmair,

Markus Haltmeier and

Otmar Scherzer. The abstract reads:

We consider the stable approximation of sparse solutions to non-linear operator equations by means of Tikhonov regularization with a subquadratic penalty term. Imposing certain assumptions, which for a linear operator are equivalent to the standard range condition, we derive the usual convergence rate O(sqrt(\delta) of the regularized solutions in dependence of the noise level \delta Particular emphasis lies on the case, where the true solution is known to have a sparse representation in a given basis. In this case, if the differential of the operator satisfies a certain injectivity condition, we can show that the actual convergence rate improves up to O(\delta).

Credit photo : me and using affine matching with ASIFT (

http://mw.cmla.ens-cachan.fr/megawave/demo/asift ) to show the correspondance between two pictures translated from one another.