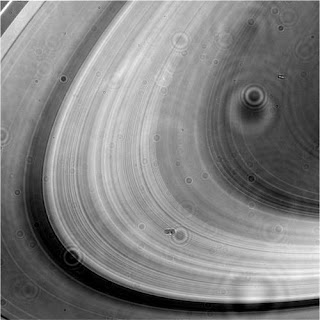

Andriyan Suksmono asked me why, in a previous post, the Cassini image showing Saturn and its rings had these Airy-like circles. So I went on Twitter and asked Phil Plait, who eventually pointed to an entry that Emily Lakdawalla wrote on the Planetary Society Blog on the 20th. It looks like it is an artifact of dust on one of the camera's filters. While one could look at it as an inconvenience, let us look at it as an opportunity: since the dust does not move, and since we have plenty of images available for calibration, could we obtain some sort of superresolution? Any thoughts?

Other images from the same camera do not show these "defects". How can this be explained?
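To make the calibration idea a little more concrete, here is a minimal sketch of how a fixed pattern could be estimated from many frames. It assumes, and this is a strong simplification, that the dust acts as a purely multiplicative per-pixel attenuation that stays the same in every frame while the scene changes. The function names `estimate_fixed_pattern` and `remove_fixed_pattern` are hypothetical, and this only covers the calibration step, not superresolution.

```python
import numpy as np

def estimate_fixed_pattern(frames):
    """Estimate a static multiplicative pattern from a stack of frames.

    frames: array of shape (n_frames, height, width), strictly positive.
    Assumption: observed = scene * pattern, with the same pattern in every frame.
    """
    frames = np.asarray(frames, dtype=float)
    # Divide each frame by its own median so overall scene brightness drops out.
    scale = np.median(frames, axis=(1, 2), keepdims=True)
    normalised = frames / scale
    # The scene changes from frame to frame, the dust does not:
    # a per-pixel median across frames keeps what is common to all of them.
    pattern = np.median(normalised, axis=0)
    # Normalise so that a clean pixel has a value close to 1.
    return pattern / np.median(pattern)

def remove_fixed_pattern(frame, pattern, floor=1e-6):
    """Divide out the estimated pattern (flat-field style correction)."""
    return np.asarray(frame, dtype=float) / np.maximum(pattern, floor)
```

The median is used rather than the mean so that a bright object crossing a pixel in a few frames does not bias the estimate. Real diffraction rings around dust are not purely multiplicative, so this should be read as a starting point only.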

Recently Deming Yuan (Vincent), a reader of this blog, wrote me the following:

Recently, a problem puzzled me: when some elements of the measurement matrix A are missing, can we still recover the sparse vector? If the answer is positive, does there exist an upper bound on the number of missing measurements? Is there any paper about this topic?

To which I replied:

I think finding all the elements is something difficult (see below). Finding some elements, I don't know. You may want to check the work of Dror Baron and Yaron Rachlin on the secrecy of CS measurements:

http://nuit-blanche.blogspot.com/2008/10/cs-secrecy-of-cs-measurements-counting.html

But in fact, I think I am somewhat off the mark in giving that answer, since Dror and Yaron are not addressing that problem. Do any of you have a better answer for Deming/Vincent?
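One way to get a feel for the question is a small numerical experiment. The sketch below takes one possible reading of it, namely that whole measurements are lost (rows of A together with the matching entries of y), and then attempts recovery with a plain Orthogonal Matching Pursuit. That reading, the problem sizes, and the choice of OMP as solver are all assumptions made for illustration. The harder case, where individual entries of A are unknown, is not covered here.

```python
import numpy as np

def omp(A, y, k):
    """Orthogonal Matching Pursuit: greedy k-sparse solution of y = A x (k >= 1)."""
    residual = y.astype(float).copy()
    support = []
    for _ in range(k):
        # Pick the column most correlated with the current residual.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j not in support:
            support.append(j)
        # Least-squares fit on the columns selected so far.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x = np.zeros(A.shape[1])
    x[support] = coef
    return x

# Illustrative sizes (assumptions): 100 unknowns, 80 measurements, 3 nonzeros.
rng = np.random.default_rng(0)
n, m, k = 100, 80, 3
A = rng.standard_normal((m, n)) / np.sqrt(m)
x0 = np.zeros(n)
x0[rng.choice(n, size=k, replace=False)] = [1.0, -1.5, 2.0]
y = A @ x0

# Simulate 20 lost measurements by keeping only 60 of the 80 rows.
kept = np.sort(rng.choice(m, size=60, replace=False))
x_hat = omp(A[kept], y[kept], k)
print("max recovery error with 60 of 80 rows:", np.max(np.abs(x_hat - x0)))
```

Shrinking the number of kept rows and watching when the error stops being negligible gives an empirical idea of how many measurements can be lost for a given sparsity, which is a different thing from a proven upper bound.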

Here are two presentations of papers mentioned previously:

- Dynamic updating for sparse time varying signals by M. Salman Asif and Justin Romberg.

- Recovery of Compressible Signals in Unions of Subspaces by Marco Duarte, Chinmay Hegde, Volkan Cevher, and Richard Baraniuk

Gabriel Peyre has compiled a long list of "numerical tours". Two of these tours involve Compressive Sensing, namely:

Split Bregman Methods and Frame Based Image Restoration by Jian-Feng Cai, Stanley Osher, and Zuowei Shen. The abstract reads:

Split Bregman methods introduced in [47] have been demonstrated as efficient tools to solve various problems arising in total variation (TV) norm minimizations of partial differential equation models for image restoration, such as image denoising and magnetic resonance imaging (MRI) reconstruction from sparse samples. In this paper, we prove that the split Bregman iterations, where the number of inner iterations is fixed to be one, converge. Furthermore, we show that these split Bregman iterations can be used to solve minimization problems arising from the analysis based approach for image restoration in the literature. Finally, we apply these split Bregman iterations to the analysis based image restoration approach whose analysis operator is derived from tight framelets constructed in [59]. This gives a set of new frame based image restoration algorithms that cover several topics in image restorations, such as image denoising, deblurring, inpainting and cartoon-texture image decomposition. Several numerical simulation results are provided.

What are Good Apertures for Defocus Deblurring? by Changyin Zhou and Shree Nayar. The abstract reads:

In recent years, with camera pixels shrinking in size, images are more likely to include defocused regions. In order to recover scene details from defocused regions, deblurring techniques must be applied. It is well known that the quality of a deblurred image is closely related to the defocus kernel, which is determined by the pattern of the aperture. The design of aperture patterns has been studied for decades in several fields, including optics, astronomy, computer vision, and computer graphics. However, previous attempts at designing apertures have been based on intuitive criteria related to the shape of the power spectrum of the aperture pattern. In this paper, we present a comprehensive framework for evaluating an aperture pattern based on the quality of deblurring. Our criterion explicitly accounts for the effects of image noise and the statistics of natural images. Based on our criterion, we have developed a genetic algorithm that converges very quickly to near-optimal aperture patterns. We have conducted extensive simulations and experiments to compare our apertures with previously proposed ones.

This is not compressive sensing per se; however, it is coded aperture work that uses many different configurations. The authors use a linear reconstruction algorithm (a very fast one at that) and there are some interesting notes about the PSF being location dependent. In the conclusion, one can read:

Diffraction is another important issue that requires further investigation. Our work, as well as previous works on coded apertures, have avoided having to deal with diffraction by simply using low-resolution aperture patterns. By explicitly modeling diffraction effects, we may be able to find even better aperture patterns for defocus deblurring.

This is interesting as it gets us back to the beginning of this entry.
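For readers wondering what a fast linear reconstruction for deblurring can look like, here is a generic Wiener-style deconvolution sketch. It is not the authors' exact algorithm: it assumes a known, spatially invariant kernel, circular boundary conditions, and a single constant noise-to-signal ratio `nsr`, all of which are simplifications chosen for illustration.

```python
import numpy as np

def circular_blur(image, kernel):
    """Blur by circular convolution, with the kernel zero-padded to the image size."""
    K = np.fft.fft2(kernel, s=image.shape)
    return np.real(np.fft.ifft2(np.fft.fft2(image) * K))

def wiener_deconvolve(blurred, kernel, nsr=1e-3):
    """Linear (Wiener-style) deblurring in the Fourier domain.

    nsr is an assumed constant noise-to-signal power ratio; it keeps the
    filter from blowing up at frequencies the kernel almost removes.
    """
    K = np.fft.fft2(kernel, s=blurred.shape)
    restoring_filter = np.conj(K) / (np.abs(K) ** 2 + nsr)
    return np.real(np.fft.ifft2(np.fft.fft2(blurred) * restoring_filter))

# Small demo on synthetic data: a 5x5 box blur stands in for a defocus kernel.
rng = np.random.default_rng(1)
image = rng.uniform(0.0, 1.0, size=(32, 32))
box = np.ones((5, 5)) / 25.0
blurred = circular_blur(image, box)
restored = wiener_deconvolve(blurred, box, nsr=1e-4)
print("blurred MSE :", np.mean((blurred - image) ** 2))
print("restored MSE:", np.mean((restored - image) ** 2))
```

The whole reconstruction is two FFTs and a pointwise multiplication, which is why this family of methods is so fast. How well it works depends on how small the kernel's frequency response gets, which is the property an aperture design criterion would try to control.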

The LinkedIn group on Compressive Sensing now has 135 members.

In a different area, forget about hyperspectral imagery: it looks like the human eye and the panchromatic GeoEye images displayed on Google Earth are enough, according to this CNET article:

A thief in the UK used Google Earth to search the roofs of churches and schools to find lead roof tiles that he could then steal. A friend of the thief said, "He could tell the lead roofs apart on Google Earth, as they were slightly darker than normal."

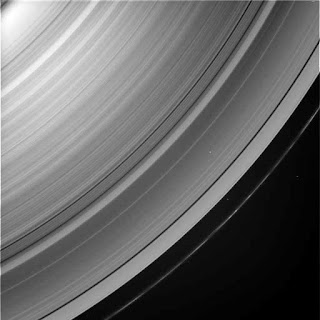

Image Credit: NASA/JPL/Space Science Institute.
