Much has happened this past month, since the last Nuit Blanche in Review (June 2016). We submitted a proposal for a two-day workshop at NIPS on "Mapping Machine Learning to Hardware" (the list of proposed speakers is included in the post). We also featured three proofs (two on the same problem from two different groups, and a third on a different problem altogether), one implementation, a survey and a video on randomized methods, some exciting results on random projections of manifolds and in practice with deep learning, a continuing set of posts showing the Great Convergence in action, a few application-centered posts and much more. Enjoy. In the meantime, the image above shows the current state of reactor 2 at Fukushima Daiichi. That image uses muon tomography and a reconstruction algorithm we mentioned back in Imaging Damaged Reactors and Volcanoes. Without further ado:

Implementation

Proofs

- The Replica-Symmetric Prediction for Compressed Sensing with Gaussian Matrices is Exact

- Mutual information for symmetric rank-one matrix estimation: A proof of the replica formula / The Mutual Information in Random Linear Estimation

- The non-convex Burer-Monteiro approach works on smooth semidefinite programs / On the low-rank approach for semidefinite programs arising in synchronization and community detection

Thesis:

Survey:

Hardware and related

- DeepBinaryMask: Learning a Binary Mask for Video Compressive Sensing

- Single-shot diffuser-encoded light field imaging

In-Depth

Random Projections

- A Powerful Generative Model Using Random Weights for the Deep Image Representation

- Time for dithering: fast and quantized random embeddings via the restricted isometry property

- Random projections of random manifolds

Random Features

Connection between compressive sensing and Deep Learning

- Group Sparse Regularization for Deep Neural Networks

- Onsager-corrected deep learning for sparse linear inverse problems

AMP / Frank-Wolfe / Hashing / Deep Learning

- An approximate message passing approach for compressive hyperspectral imaging using a simultaneous low-rank and joint-sparsity prior

- Stochastic Frank-Wolfe Methods for Nonconvex Optimization

- gvnn: Neural Network Library for Geometric Computer Vision

- Dual Purpose Hashing

Application-centered

- Gene expression prediction using low-rank matrix completion

- Streaming algorithms for identification of pathogens and antibiotic resistance potential from real-time MinION (TM) sequencing

- Of Li-Ion Batteries and Biochemical networks: Finding a Low Dimensional Models in Haystacks

Videos:

- Saturday Morning Video: On the Expressive Power of Deep Learning: A Tensor Analysis, Nadav Cohen, Or Sharir, Amnon Shashua @ COLT2016

- Saturday Morning Video: Benefits of depth in neural networks, Matus Telgarsky @ COLT2016

- Saturday Morning Video: The Power of Depth for Feedforward Neural Networks by Ohad Shamir @ COLT2016

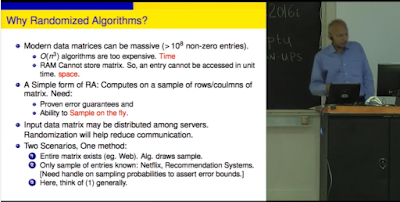

- Saturday Morning Video: Randomized Algorithms in Linear Algebra, Ravi Kannan @ COLT2016

- Jobs: 2 Postdocs, LIONS lab @ EPFL

- CSjob: Two Postdocs, C-SENSE: Exploiting low dimensional signal models for sensing, computation and processing, Edinburgh, Scotland

- CSjob: Postdoc, Signal processing & inverse problems for the future SKA, Nice, France

LightOn:

Credit photo: TEPCO