Following up on meetup #6 Season 2, John Langford just gave us a tutorial presentation on Vowpal Wabbit this morning in Paris (Thank you Christophe and AXA for hosting us). Here are the slides:

Here is some additional relevant background material.

- The wiki: https://github.com/JohnLangford/vowpal_wabbit/wiki
- The mailing list: https://groups.yahoo.com/neo/groups/vowpal_wabbit/info
- Learning to search framework: http://arxiv.org/abs/1406.1837
- Learning to search algorithm: http://arxiv.org/abs/1502.02206
- Learning reductions survey: http://arxiv.org/abs/1502.02704
- Learning to interact tutorial: http://hunch.net/~jl/interact.pdf
- Most recent exciting paper: http://arxiv.org/abs/1402.0555
- Feature Hashing: http://arxiv.org/abs/0902.2206
- Terascale learning: http://arxiv.org/abs/1110.4198
- Many of the lectures in the large scale learning class with Yann @NYU: http://cilvr.cs.nyu.edu/doku.php?id=courses:bigdata:slides:start
- Hash Kernels: http://jmlr.org/proceedings/papers/v5/shi09a/shi09a.pdf

I very much liked the exploration bit for policy evaluation: if you don't explore, you just don't know, and prediction errors are not controlled exploration. This is much like the example Leon Bottou gave us on counterfactual reasoning a year ago (see his slides: Learning to Interact).
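
To make the exploration point concrete, here is a minimal sketch of off-policy evaluation with inverse propensity scoring (IPS). It is a toy illustration and not code from the talk or from Vowpal Wabbit: the two-action setup, the reward rule, and the names `ips_estimate`, `simulate_logs` and `match_context` are all assumptions made for this example. Logs collected with random exploration let us estimate the value of a policy we never ran; logs from a logger that never explores do not.

```python
import random


def ips_estimate(logs, target_policy):
    """Inverse propensity scoring estimate of the target policy's mean reward.

    Each log entry is (context, action, reward, prob), where prob is the
    probability with which the logging policy chose that action.
    """
    total = 0.0
    for context, action, reward, prob in logs:
        # Only entries where the target policy agrees with the logged action
        # contribute, reweighted by how unlikely that action was to be logged.
        if target_policy(context) == action:
            total += reward / prob
    return total / len(logs)


def simulate_logs(n, explore, seed=0):
    """Toy logger (hypothetical): binary context, two actions, reward 1 on a match."""
    rng = random.Random(seed)
    logs = []
    for _ in range(n):
        context = rng.randint(0, 1)
        if explore:
            # Uniform random exploration: each action has probability 0.5.
            action, prob = rng.randint(0, 1), 0.5
        else:
            # No exploration: the logger always plays action 0.
            action, prob = 0, 1.0
        reward = 1.0 if action == context else 0.0
        logs.append((context, action, reward, prob))
    return logs


def match_context(context):
    """Target policy to evaluate: play the action equal to the context."""
    return context


# The true value of match_context in this toy world is 1.0.
print("with exploration:   ", ips_estimate(simulate_logs(20000, True), match_context))
print("without exploration:", ips_estimate(simulate_logs(20000, False), match_context))
```

With exploration the estimate lands close to the true value of 1.0. Without it, the estimate sits near 0.5, because the logs contain no information about what action 1 would have earned.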

The paper below will be the subject of John's presentation at ICML in Lille next week:

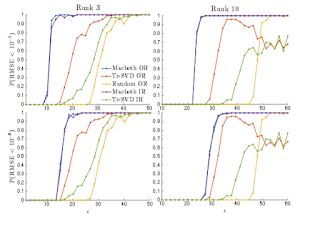

Methods for learning to search for structured prediction typically imitate a reference policy, with existing theoretical guarantees demonstrating low regret compared to that reference. This is unsatisfactory in many applications where the reference policy is suboptimal and the goal of learning is to improve upon it. Can learning to search work even when the reference is poor? We provide a new learning to search algorithm, LOLS, which does well relative to the reference policy, but additionally guarantees low regret compared to deviations from the learned policy: a local-optimality guarantee. Consequently, LOLS can improve upon the reference policy, unlike previous algorithms. This enables us to develop structured contextual bandits, a partial information structured prediction setting with many potential applications.