A curated list of resources on implicit neural representations, inspired by awesome-computer-vision. Work-in-progress.

This list does not aim to be exhaustive, as implicit neural representations are a rapidly evolving & growing research field with hundreds of papers to date.

Instead, it focuses on papers introducing key concepts & foundations of implicit neural representations across applications. It's a great reading list if you want to get started in this area!

For most papers, there is a short summary of the most important contributions.

Disclosure: I am an author on the following papers:

- Scene Representation Networks: Continuous 3D-Structure-Aware Neural Scene Representations

- MetaSDF: Meta-Learning Signed Distance Functions

- Implicit Neural Representations with Periodic Activation Functions

- Inferring Semantic Information with 3D Neural Scene Representations

What are implicit neural representations?

Implicit Neural Representations (sometimes also referred to as coordinate-based representations) are a novel way to parameterize signals of all kinds. Conventional signal representations are usually discrete: for instance, images are discrete grids of pixels, audio signals are discrete samples of amplitudes, and 3D shapes are usually parameterized as grids of voxels, point clouds, or meshes. In contrast, Implicit Neural Representations parameterize a signal as a continuous function that maps the domain of the signal (i.e., a coordinate, such as a pixel coordinate for an image) to whatever is at that coordinate (for an image, an R,G,B color). Of course, these functions are usually not analytically tractable: it is impossible to "write down" the function that parameterizes a natural image as a mathematical formula. Implicit Neural Representations thus approximate that function via a neural network.
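To make the mapping concrete, here is a minimal NumPy sketch of a coordinate-based network that takes an (x, y) pixel coordinate and returns an RGB triple. It uses sine activations and the initialization scheme of SIREN (the periodic-activation paper listed above), but it is only an untrained forward pass: the layer sizes, w0 = 30, the bias initialization, and the helper names `init_siren` / `siren_forward` are assumptions for illustration, and fitting an actual image would need a training loop that is not shown.

```python
import numpy as np

def init_siren(layer_sizes, w0=30.0, seed=0):
    """Weights for a sine-activated MLP, following the SIREN initialization scheme."""
    rng = np.random.default_rng(seed)
    params = []
    for i, (n_in, n_out) in enumerate(zip(layer_sizes[:-1], layer_sizes[1:])):
        # First layer: U(-1/n_in, 1/n_in); later layers: U(-sqrt(6/n_in)/w0, sqrt(6/n_in)/w0)
        bound = 1.0 / n_in if i == 0 else np.sqrt(6.0 / n_in) / w0
        W = rng.uniform(-bound, bound, size=(n_in, n_out))
        b = rng.uniform(-bound, bound, size=n_out)  # simplification: same range as the weights
        params.append((W, b))
    return params

def siren_forward(params, coords, w0=30.0):
    """Map coordinates of shape (N, 2) to RGB values of shape (N, 3)."""
    h = coords
    for W, b in params[:-1]:
        h = np.sin(w0 * (h @ W + b))  # periodic activation
    W, b = params[-1]
    return h @ W + b                  # linear output layer

params = init_siren([2, 64, 64, 3])

# Query every pixel centre of a 32x32 image, with coordinates in [-1, 1]^2 ...
xs = np.linspace(-1.0, 1.0, 32)
grid = np.stack(np.meshgrid(xs, xs, indexing="ij"), axis=-1).reshape(-1, 2)
rgb_grid = siren_forward(params, grid)                          # shape (1024, 3)

# ... or a single continuous coordinate that no pixel grid stores
rgb_point = siren_forward(params, np.array([[0.123, -0.456]]))  # shape (1, 3)
```

Because the network is a function of continuous coordinates, the same parameters answer queries on a 32x32 grid or at a single off-grid point.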

Why are they interesting?

Implicit Neural Representations have several benefits. First, they are no longer coupled to spatial resolution the way, for instance, an image is coupled to its number of pixels. This is because they are continuous functions! Thus, the memory required to parameterize the signal is independent of spatial resolution and only scales with the complexity of the underlying signal. Another corollary is that implicit representations have "infinite resolution": they can be sampled at arbitrary spatial resolutions.

This is immediately useful for a number of applications, such as super-resolution, or in parameterizing signals in 3D and higher dimensions, where memory requirements grow intractably fast with spatial resolution.
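The resolution independence can be illustrated with a deliberately simple stand-in rather than a deep network: a random Fourier feature model (fixed random sinusoids with a linear layer on top, fitted by least squares) is fitted to 200 samples of a toy 1D signal and then queried on a 5000-point grid. The signal, the 64 frequencies and their scale are arbitrary choices for this sketch; the point is that the parameter count (128) stays fixed whatever grid we evaluate on.

```python
import numpy as np

def fourier_features(x, freqs):
    """Map scalar coordinates x (shape (N,)) to [cos(2*pi*f*x), sin(2*pi*f*x)] features."""
    proj = 2 * np.pi * np.outer(x, freqs)
    return np.concatenate([np.cos(proj), np.sin(proj)], axis=1)

def signal(x):
    # Toy 1D "ground-truth" signal standing in for, e.g., one row of an image
    return np.sin(2 * np.pi * x) + 0.5 * np.sin(6 * np.pi * x)

rng = np.random.default_rng(0)
freqs = rng.normal(0.0, 3.0, size=64)  # random frequencies, fixed after sampling

# Observe the signal at a coarse resolution of 200 samples on [0, 1] ...
x_coarse = np.linspace(0.0, 1.0, 200)
weights, *_ = np.linalg.lstsq(fourier_features(x_coarse, freqs), signal(x_coarse), rcond=None)

# ... then query the fitted function on a 25x denser grid
x_fine = np.linspace(0.0, 1.0, 5000)
y_fine = fourier_features(x_fine, freqs) @ weights

print(weights.size)                             # number of parameters, independent of the query grid
print(np.max(np.abs(y_fine - signal(x_fine))))  # maximum error on the dense grid
```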

However, in the future, the key promise of implicit neural representations lies in algorithms that directly operate in the space of these representations. In other words: what's the "convolutional neural network" equivalent of a neural network operating on images represented by implicit representations? Questions like these offer a path towards a class of algorithms that are independent of spatial resolution!..........


Tuesday, December 29, 2020

The Awesome Implicit Neural Representations Highly Technical Reference Page

** Nuit Blanche is now on Twitter: @NuitBlog **

Here is a new curated page on the topic of Implicit Neural Representations aptly called Awesome Implicit Neural Representations. It is curated by Vincent Sitzmann (@vincesitzmann) and has been added to the Highly Technical Reference Page:

From the page:

Follow @NuitBlog or join the CompressiveSensing Reddit, the Facebook page, the Compressive Sensing group on LinkedIn or the Advanced Matrix Factorization group on LinkedIn

Other links:

Paris Machine Learning: Meetup.com||@Archives||LinkedIn||Facebook|| @ParisMLGroup

About LightOn: Newsletter ||@LightOnIO|| on LinkedIn || on CrunchBase || our Blog

Monday, December 21, 2020

Hardware Beyond Backpropagation: a Photonic Co-Processor for Direct Feedback Alignment

Liked this entry ? subscribe to Nuit Blanche's feed, there's more where that came from. You can also subscribe to Nuit Blanche by Email.

We presented this work at the Beyond Backpropagation workshop at NeurIPS. A great conjunction between computational hardware and algorithms!

Hardware Beyond Backpropagation: a Photonic Co-Processor for Direct Feedback Alignment by Julien Launay, Iacopo Poli, Kilian Müller, Gustave Pariente, Igor Carron, Laurent Daudet, Florent Krzakala, Sylvain Gigan

The scaling hypothesis motivates the expansion of models past trillions of parameters as a path towards better performance. Recent significant developments, such as GPT-3, have been driven by this conjecture. However, as models scale up, training them efficiently with backpropagation becomes difficult. Because model, pipeline, and data parallelism distribute parameters and gradients over compute nodes, communication is challenging to orchestrate: this is a bottleneck to further scaling. In this work, we argue that alternative training methods can mitigate these issues, and can inform the design of extreme-scale training hardware. Indeed, using a synaptically asymmetric method with a parallelizable backward pass, such as Direct Feedback Alignment, communication needs are drastically reduced. We present a photonic accelerator for Direct Feedback Alignment, able to compute random projections with trillions of parameters. We demonstrate our system on benchmark tasks, using both fully-connected and graph convolutional networks. Our hardware is the first architecture-agnostic photonic co-processor for training neural networks. This is a significant step towards building scalable hardware, able to go beyond backpropagation, and opening new avenues for deep learning.
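For readers new to Direct Feedback Alignment, the core idea fits in a few lines: the output error is sent to every hidden layer through its own fixed random matrix, rather than backpropagated through the transposed forward weights, so all layer updates can be computed in parallel once the error is known. The NumPy sketch below trains a small two-hidden-layer tanh network on a toy regression task; it is a CPU simulation, not the photonic implementation described in the paper, and the sizes, learning rate, feedback-matrix scaling and the name `train_dfa` are assumptions.

```python
import numpy as np

def train_dfa(x, y, hidden=32, lr=0.05, steps=500, seed=0):
    """Train a 2-hidden-layer tanh MLP with Direct Feedback Alignment; return the loss history."""
    rng = np.random.default_rng(seed)
    d_in, d_out = x.shape[1], y.shape[1]
    W1 = rng.normal(0.0, 1.0 / np.sqrt(d_in), size=(d_in, hidden))
    W2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), size=(hidden, hidden))
    W3 = rng.normal(0.0, 0.1, size=(hidden, d_out))
    # Fixed random feedback matrices, never trained: they carry the output error
    # directly to each hidden layer instead of the transposed forward weights.
    B1 = rng.normal(0.0, 1.0 / np.sqrt(d_out), size=(d_out, hidden))
    B2 = rng.normal(0.0, 1.0 / np.sqrt(d_out), size=(d_out, hidden))
    n = x.shape[0]
    losses = []
    for _ in range(steps):
        h1 = np.tanh(x @ W1)
        h2 = np.tanh(h1 @ W2)
        e = h2 @ W3 - y                                # global output error
        losses.append(0.5 * np.mean(np.sum(e ** 2, axis=1)))
        # Both hidden-layer signals depend only on e, so they can be computed in parallel
        d2 = (e @ B2) * (1.0 - h2 ** 2)                # tanh'(a) = 1 - tanh(a)**2
        d1 = (e @ B1) * (1.0 - h1 ** 2)
        W3 -= lr * h2.T @ e / n                        # output layer: ordinary gradient
        W2 -= lr * h1.T @ d2 / n
        W1 -= lr * x.T @ d1 / n
    return losses

rng = np.random.default_rng(1)
x = rng.normal(size=(256, 5))
y = x @ rng.normal(size=(5, 2))                        # toy linear regression target
losses = train_dfa(x, y)
print(losses[0], losses[-1])
```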

Saturday, December 19, 2020

Diffraction-unlimited imaging based on conventional optical devices

Aurélien sent me an email back in October and we are now in December! Time flies.

Dear Igor,

I hope things are well. I have been following your Nuit Blanche blog for quite a few years. It would thus be great for us if you would consider featuring a recent paper of ours on your blog, entitled “Diffraction-unlimited imaging based on conventional optical devices”. This paper has been published in Optics Express this year and its link is: https://www.osapublishing.org/oe/abstract.cfm?uri=oe-28-8-11243

This manuscript proposes a new imaging paradigm for objects that are too far away to be illuminated or accessed, which allows them to be resolved beyond the limit of diffraction; this setting is thus distinct from microscopy. Our concept involves an easy-to-implement acquisition procedure where a spatial light modulator (SLM) is placed some distance from a conventional optical device. After acquisition of a sequence of images for different SLM patterns, the object is reconstructed numerically. The key novelty of our acquisition approach is to ensure that the SLM modulates light before information is lost due to diffraction.

Feel free to let us know what you think; we are happy to provide more information/pictures if needed. Thanks a lot for your time and consideration!

Best regards,
Aurélien Bourquard

Thank you Aurélien!

Here is the paper's abstract:

Diffraction-unlimited imaging based on conventional optical devices by Nicolas Ducros and Aurélien Bourquard

We propose a computational paradigm where off-the-shelf optical devices can be used to image objects in a scene well beyond their native optical resolution. By design, our approach is generic, does not require active illumination, and is applicable to several types of optical devices. It only requires the placement of a spatial light modulator some distance from the optical system. In this paper, we first introduce the acquisition strategy together with the reconstruction framework. We then conduct practical experiments with a webcam that confirm that this approach can image objects with substantially enhanced spatial resolution compared to the performance of the native optical device. We finally discuss potential applications, current limitations, and future research directions.
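The principle of modulating light before the resolution-limiting step can be illustrated with a toy 1D linear model. Here the optical system is replaced by a hypothetical block-averaging operator H that keeps one value per 4 samples, and each SLM pattern multiplies the object before H; stacking the acquisitions gives a linear system that least squares can invert. This is our own simplified sketch, not the paper's optical model or reconstruction framework; the sizes, the random gray-level patterns and the helper `block_average` are assumptions.

```python
import numpy as np

def block_average(n, factor):
    """Toy resolution-limiting operator: average each block of `factor` neighbouring samples."""
    H = np.zeros((n // factor, n))
    for j in range(n // factor):
        H[j, j * factor:(j + 1) * factor] = 1.0 / factor
    return H

n, factor, n_patterns = 64, 4, 8
rng = np.random.default_rng(0)
x_true = rng.normal(size=n)                              # hypothetical 1D "object"
H = block_average(n, factor)

# Without modulation, the 16 measurements H @ x cannot determine 64 unknowns
x_plain, *_ = np.linalg.lstsq(H, H @ x_true, rcond=None)

# With modulation: pattern m_k multiplies the light *before* the lossy operator,
# so acquisition k records y_k = H @ diag(m_k) @ x
patterns = rng.uniform(0.0, 1.0, size=(n_patterns, n))  # gray-level transmission in [0, 1]
A = np.vstack([H * m for m in patterns])                # H * m equals H @ np.diag(m)
y = A @ x_true
x_hat, *_ = np.linalg.lstsq(A, y, rcond=None)

print(np.linalg.norm(x_plain - x_true), np.linalg.norm(x_hat - x_true))
```

In this toy model, moving the modulation after H would not help: every acquisition would then only see the 16 block averages.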

I note that Aurélien has also published some exciting research on Differential Imaging Forensics. His co-author Nicolas has also done some interesting work on single-pixel cameras.

Wednesday, December 09, 2020

LightOn at #NeurIPS2020

We live in interesting times!

The combination of the post-Moore’s law era and the advent of very large ML models requires all of us to think up new approaches to computing hardware and AI algorithms at the same time. LightOn is one of the few (20) companies in the world publishing in both AI and hardware venues, engaging both communities in thinking about how theories and workflows may eventually be transformed by the photonic technology we develop.

This year, thanks to the awesome Machine Learning team at LightOn, we have two accepted papers at NeurIPS, the AI flagship conference, and five papers in its “Beyond Backpropagation” satellite workshop, which will take place on Saturday. This is significant on many levels, not least because these papers have been nurtured and spearheaded by two Ph.D. students (Ruben Ohana and Julien Launay) who are doing their theses as LightOn engineers.

Here is the list of the different papers accepted at NeurIPS this year that involved LightOn members:

- Reservoir Computing meets Recurrent Kernels and Structured Transforms, Jonathan Dong, Ruben Ohana, Mushegh Rafayelyan, Florent Krzakala. Links: Oral, poster, paper (presenter: Ruben Ohana). Poster Session 4 on Wed, Dec 9th, 2020 @ 18:00–20:00 CET. GatherTown: Deep learning (Town E1, Spot C0). Join GatherTown. Only if the poster is crowded, join Zoom.

- Direct Feedback Alignment Scales to Modern Deep Learning Tasks and Architectures, Julien Launay, François Boniface, Iacopo Poli, Florent Krzakala. Links: Poster, paper (Presenter: Julien Launay). Poster Session 6, on Thu, Dec 10th, 2020 @ 18:00–20:00 CET. GatherTown: Neuroscience and Cognitive Science (Town A3, Spot B0).

And at the NeurIPS Beyond Backpropagation workshop taking place on Saturday, December 12:

- Hardware Beyond Backpropagation: a Photonic Co-Processor for Direct Feedback Alignment, Julien Launay, Iacopo Poli, Kilian Müller, Gustave Pariente, Igor Carron, Laurent Daudet, Florent Krzakala, Sylvain Gigan

- Direct Feedback Alignment Scales to Modern Deep Learning Tasks and Architectures, Julien Launay, François Boniface, Iacopo Poli, Florent Krzakala (Presenter: Julien Launay).

- Ignorance is Bliss: Adversarial Robustness by Design through Analog Computing and Synaptic Asymmetry, Alessandro Cappelli, Ruben Ohana, Julien Launay, Iacopo Poli, Florent Krzakala (Presenter: Alessandro Cappelli). We had a blog post on this recently.

- Align, then Select: Analysing the Learning Dynamics of Feedback Alignment, Maria Refinetti, Stéphane d’Ascoli, Ruben Ohana, Sebastian Goldt. Paper (Presenter: Ruben Ohana).

- How and When does Feedback Alignment Work, Stéphane d’Ascoli, Maria Refinetti, Ruben Ohana, Sebastian Goldt. Paper (Presenter: Ruben Ohana).

Some of these presentations are given in French at the “Déjeuners virtuels de NeurIPS” (virtual NeurIPS lunches).

About myself: LightOn || Google Scholar || LinkedIn ||@IgorCarron ||Homepage||ArXiv

Wednesday, October 14, 2020

Weight Agnostic Neural Networks, a virtual presentation by Adam Gaier, Thursday October 15th, LightOn AI meetup #7

Ever since we started LightOn, we have put some emphasis on having great minds think about how new algorithms are possible and how they can be enabled by our photonic chips. We also hold a regular meetup where we see how other great minds are devising new algorithms.

Tomorrow, Thursday October 15th, we are continuing this journey with Adam Gaier, who will talk to us about Weight Agnostic Neural Networks. The virtual meetup will start at:

- 16:00 (UTC+2) Paris time but also

- 7AM PST,

- 10AM CST,

- 11PM JST.

To have more information about connecting to the meetup, please register here: https://meetup.com/LightOn-meetup/events/273660363/

Saturday, October 10, 2020

As The World Turns: Implementations now on ArXiv thanks to Papers with Code

It's the little things.

In the 2000s, after featuring good work on Nuit Blanche, I usually followed through by asking authors where their code was. This is how the implementation tag was born. Some of the answers were along the lines of: "I didn't make it available because I thought it was not worthy". What I usually responded was that, in effect, releasing one's code had a compounding effect on the community:

"You may not think it's worthy of release, but somehow, someone somewhere needs your code for reasons you cannot fathom"

As a result, I made a conscious choice to feature papers that made their implementations available. The earliest post with featured implementations was on February 28th, 2007: a blog post featuring three different implementations of reconstruction solvers for compressed sensing. Yes, implementations were already available before that, but within the compressive sensing community, it was a point in time with a collective realization that releasing one's code would bring others to reuse one's work and advance the field as a result. At some point, I started compiling a long list of available implementations but got swamped after a while, because releasing code had, most of the time, become the default behavior (a good thing).

Five years ago, Samim Winiger started GitXiv around Machine Learning papers. I was ecstatic, but the site eventually stopped working. Two years ago, the Papers with Code site started around the same issue and flourished. Congratulations to Robert, Ross, Marcin, Viktor, and Ludovic on starting a vibrant community around this need for listing papers with their attendant code. Two days ago, the next logical step occurred with code links being featured within arXiv, a fantastic advance for Science. Woohoo!

My next question is:

When are they going to get a prize for this?

Friday, May 29, 2020

Photonic Computing for Massively Parallel AI is out and it is spectacular!

It’s been a long time brewing, but we just released our first white paper on Photonic Computing for Massively Parallel AI. The document features the technology we develop at LightOn, some of its uses, some testimonials, and how we see the future of computing. It is downloadable here or from our website: LightOn.ai

Enjoy!

Friday, May 15, 2020

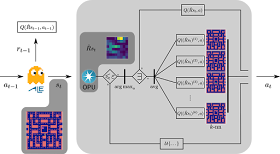

Tackling Reinforcement Learning with the Aurora OPU

Martin Graive did an internship at LightOn and decided to investigate how to use Random Projections in the context of Reinforcement Learning. He just wrote a blog post on the matter entitled "Tackling Reinforcement Learning with the Aurora OPU". The attendant GitHub repo is located here. Enjoy!
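One generic way random projections enter reinforcement learning is as a fixed feature map under a linear value-function learner. The sketch below is our own illustration, not the method from the blog post or its repository: observations (here one-hot states of a hypothetical 5-state chain) are randomly projected to 20 features, and the value function is estimated with least-squares temporal difference learning (LSTD). The chain, the discount factor, the projection size and the helper `phi` are assumptions.

```python
import numpy as np

n_states, gamma, k = 5, 0.9, 20
rng = np.random.default_rng(0)
R = rng.normal(size=(k, n_states)) / np.sqrt(k)  # fixed random projection of one-hot states

def phi(s):
    """Projected features of state s; the terminal state (s == n_states) maps to zeros."""
    return np.zeros(k) if s == n_states else R[:, s]

# Hypothetical deterministic chain: s -> s + 1, reward 1 only when leaving the last state
transitions = [(s, 1.0 if s == n_states - 1 else 0.0, s + 1) for s in range(n_states)]

# LSTD: solve A w = b with A = sum phi(s) (phi(s) - gamma * phi(s'))^T and b = sum r * phi(s)
A = np.zeros((k, k))
b = np.zeros(k)
for s, r, s_next in transitions:
    A += np.outer(phi(s), phi(s) - gamma * phi(s_next))
    b += r * phi(s)
w, *_ = np.linalg.lstsq(A, b, rcond=1e-10)  # A has rank n_states < k, so use least squares

v_hat = np.array([phi(s) @ w for s in range(n_states)])
print(v_hat)  # close to gamma ** (4 - s) for s = 0..4
```

On this deterministic chain the exact values are gamma^(4 - s), and the projected features are rich enough (one independent direction per state) for LSTD to recover them.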

Wednesday, April 29, 2020

3-year PhD studentship in Inverse Problems and Optical Computing, LightOn, Paris, France

Come and join us at LightOn! We have a 3-year PhD fellowship available for someone who can help us build our future photonic cores. Here is the announcement:

As part of the newly EU-funded ITN project “Post-Digital”, LightOn has an opening for a fully funded 3-year Ph.D. studentship to join its R&D team, at the crossroads between Computer Science and Physics.

The goal of this 3-year Ph.D. position is to theoretically, numerically, and experimentally investigate how optimization techniques can be used in the design of hybrid computing pipelines, including a number of photonic building blocks (“photonic cores”). In particular, the optimized networks will be used to solve large-scale physics-based inverse problems in science and engineering, for instance in medical imaging (e.g. ultrasound), or simulation problems. The candidate will first investigate how LightOn’s current range of photonic co-processors can be integrated within task-specific networks. The candidate will then develop a computational framework for the optimization of electro-optical systems. Finally, optimized systems will be built and evaluated on experimental data. This project will be part of LightOn’s internal THEIA project, aiming at automating the design of hybrid computing architectures, including combinations of LightOn’s photonic cores and traditional silicon chips.

In the framework of the EU funded ITN Post-Digital network, this project involves collaborations and 3-month secondments with two research groups led by:

- Daniel Brunner (Université Bourgogne Franche-Comté / FEMTO-ST Besançon), who will be the academic supervisor - The candidate will be registered as a Ph.D. student at UBFC.

- Pieter Bienstman (IMEC, Leuven, Belgium).

The supervisor at LightOn will be Laurent Daudet, CTO - currently on leave from his position of professor of physics at Université de Paris.

Due to the EU funding source, please make sure you comply with the mobility and eligibility rules before applying. Application: position to be filled no later than Sept 1st, 2020.

Send your application with a CV to jobs@lighton.io with [Post-Digital PhD] in the subject line. Shortlisted applicants will be asked to provide references. This project has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement No 860830.

For more information: https://lighton.ai/careers/

Tuesday, April 07, 2020

LightOn Cloud 2.0 featuring LightOn Aurora OPUs

At LightOn, we just launched LightOn Cloud 2.0, which features several Aurora Optical Processing Units (OPUs) for use by the Machine Learning community. The blog post about this can be found here. You can request access to the Cloud at https://cloud.lighton.ai/

We also have a LightOn Cloud for Research program: https://cloud.lighton.ai/lighton-research/

- [En] Press Release: LightOn launches LightOn Cloud 2.0 featuring Aurora OPUs, April 7th, 2020

- [Fr] Press release (in French): LightOn lance le LightOn Cloud 2.0 avec des OPUs Aurora (LightOn launches LightOn Cloud 2.0 with Aurora OPUs), April 7th, 2020

Thursday, March 26, 2020

Accelerating SARS-COv2 Molecular Dynamics Studies with Optical Random Features

We just published a new blog post at LightOn. This time, we used LightOn's Optical Processing Unit to show how our hardware can help speed up global sampling studies that use Molecular Dynamics simulations, as in the case of metadynamics. Our engineer, Amélie Chatelain, wrote a blog post about it, and it is here: Accelerating SARS-COv2 Molecular Dynamics Studies with Optical Random Features

We showed that LightOn's OPU, in tandem with the NEWMA algorithm, becomes very interesting (compared to CPU implementations of Random Fourier Features and FastFood) for simulations featuring more than 4,000 atoms.
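NEWMA detects changes in a data stream by comparing two exponentially weighted moving averages of random features of the data, one that forgets quickly and one that forgets slowly; their distance grows after the distribution changes. In the sketch below, CPU Random Fourier Features stand in for the optical random features, the stream is a synthetic Gaussian whose mean shifts at t = 600, and the forgetting factors, bandwidth, feature count and helper names are assumptions. The thresholding step that turns the statistic into alarms is omitted.

```python
import numpy as np

def make_rff(d, m, sigma, rng):
    """Random Fourier features approximating a Gaussian kernel of bandwidth sigma."""
    W = rng.normal(0.0, 1.0 / sigma, size=(m, d))
    b = rng.uniform(0.0, 2 * np.pi, size=m)
    return lambda x: np.sqrt(2.0 / m) * np.cos(W @ x + b)

def newma(stream, psi, fast=0.05, slow=0.01):
    """Return the NEWMA statistic ||z_fast - z_slow|| for every sample of the stream."""
    z_fast = np.zeros_like(psi(stream[0]))
    z_slow = np.zeros_like(z_fast)
    stats = np.empty(len(stream))
    for t, x in enumerate(stream):
        feat = psi(x)
        z_fast = (1 - fast) * z_fast + fast * feat  # forgets the past quickly
        z_slow = (1 - slow) * z_slow + slow * feat  # forgets the past slowly
        stats[t] = np.linalg.norm(z_fast - z_slow)
    return stats

rng = np.random.default_rng(0)
d = 10
# Synthetic stream: the mean of the data shifts from 0 to 2 at t = 600
stream = np.vstack([rng.normal(0.0, 1.0, size=(600, d)),
                    rng.normal(2.0, 1.0, size=(400, d))])
stats = newma(stream, make_rff(d, m=300, sigma=np.sqrt(d), rng=rng))
print(stats[400:600].mean(), stats[610:660].mean())  # before vs. just after the change
```

Both averages are updated with one feature vector per sample, so memory stays constant regardless of stream length; the cost per sample is dominated by computing the random features, which is the step an OPU would take over.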

Because building computational hardware makes no sense if we don't have a community that lifts us, the code used to generate the plots in that blog post is publicly available at the following link: https://github.com/lightonai/newma-md.

Saturday, March 14, 2020

Au Revoir Backprop ! Bonjour Optical Transfer Learning !

We recently used LightOn's Optical Processing Unit to show how our hardware fares in the context of transfer learning. Our engineer, Luca Tommasone, wrote a blog post about it, and it is here: Au Revoir Backprop! Bonjour Optical Transfer Learning!
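A plausible shape for such a pipeline, as a hedged sketch: features from a frozen pretrained network are binarized, passed through a random projection, and only a linear (ridge) classifier is fitted, so no backpropagation is needed. Everything here is simulated in NumPy: the optical step is modelled as |xR|^2 with a complex Gaussian matrix R (an assumption about the physics, not LightOn's API), the "backbone features" are synthetic Gaussian vectors standing in for a real network's outputs, and the helper names are hypothetical.

```python
import numpy as np

def simulated_opu(x_binary, n_components, seed=0):
    """Stand-in for an optical random projection: |x R|^2 with a complex Gaussian R."""
    rng = np.random.default_rng(seed)
    d = x_binary.shape[1]
    R = (rng.normal(size=(d, n_components))
         + 1j * rng.normal(size=(d, n_components))) / np.sqrt(2 * d)
    return np.abs(x_binary @ R) ** 2

def with_intercept(F):
    return np.hstack([F, np.ones((F.shape[0], 1))])

def fit_ridge(F, Y, alpha=1.0):
    """Closed-form ridge regression: the only trained component, no backpropagation."""
    F = with_intercept(F)
    return np.linalg.solve(F.T @ F + alpha * np.eye(F.shape[1]), F.T @ Y)

def predict(F, W):
    return np.argmax(with_intercept(F) @ W, axis=1)

# Hypothetical "frozen backbone" outputs: 64-d feature vectors for two classes
rng = np.random.default_rng(1)
labels = rng.integers(0, 2, size=2000)
feats = rng.normal(size=(2000, 64)) + np.where(labels[:, None] == 1, 0.5, -0.5)
x_bin = (feats > 0).astype(float)  # binary encoding before the random projection

F = simulated_opu(x_bin, n_components=256)
W = fit_ridge(F[:1500], np.eye(2)[labels[:1500]])
accuracy = np.mean(predict(F[1500:], W) == labels[1500:])
print(accuracy)
```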

Because building computational hardware makes no sense if we don't have a community that lifts us, the code used to generate the plots in that blog post is publicly available at the following link: https://github.com/lightonai/transfer-learning-opu.

Enjoy and, most importantly, stay safe!

Wednesday, January 15, 2020

Beyond Overfitting and Beyond Silicon: The double descent curve

Two days ago, Becca Willett gave a talk on the same subject at the Turing Institute in London.

The attendant preprint is here:

A Function Space View of Bounded Norm Infinite Width ReLU Nets: The Multivariate Case by Greg Ongie, Rebecca Willett, Daniel Soudry, Nathan Srebro

We recently tried a small experiment with LightOn's Optical Processing Unit on the issue of generalization. Our engineer, Alessandro Cappelli, ran the experiment and wrote a blog post about it, which is here: Beyond Overfitting and Beyond Silicon: The double descent curve
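The double descent curve can be reproduced on a CPU with random-feature regression. In this sketch (our own setup, not the experiment from the blog post), a fixed random ReLU layer of width p feeds a minimum-norm least-squares readout fitted on 100 noisy samples. The test error typically peaks when p equals the number of training samples (the interpolation threshold) and decreases again for larger p. The linear teacher, noise level, widths and the name `double_descent_curve` are assumptions.

```python
import numpy as np

def double_descent_curve(widths, n_train=100, n_test=1000, d=10, noise=0.5, seed=0):
    """Test MSE of min-norm least squares on random ReLU features, for each width p."""
    rng = np.random.default_rng(seed)
    beta = rng.normal(size=d) / np.sqrt(d)                 # hypothetical linear teacher
    x_tr = rng.normal(size=(n_train, d))
    y_tr = x_tr @ beta + noise * rng.normal(size=n_train)  # noisy training labels
    x_te = rng.normal(size=(n_test, d))
    y_te = x_te @ beta                                     # clean test labels
    errors = []
    for p in widths:
        W = rng.normal(size=(d, p)) / np.sqrt(d)           # fixed random first layer
        F_tr, F_te = np.maximum(x_tr @ W, 0.0), np.maximum(x_te @ W, 0.0)
        # pinv gives the least-squares fit for p < n_train and the
        # minimum-norm interpolating fit for p >= n_train
        a = np.linalg.pinv(F_tr) @ y_tr
        errors.append(np.mean((F_te @ a - y_te) ** 2))
    return np.array(errors)

widths = [10, 50, 100, 200, 1000]
errors = double_descent_curve(widths)
for p, err in zip(widths, errors):
    print(p, err)
```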

A function space view of overparameterized neural networks, by Rebecca Willett.

A Function Space View of Bounded Norm Infinite Width ReLU Nets: The Multivariate Case by Greg Ongie, Rebecca Willett, Daniel Soudry, Nathan Srebro

A key element of understanding the efficacy of overparameterized neural networks is characterizing how they represent functions as the number of weights in the network approaches infinity. In this paper, we characterize the norm required to realize a function $f:\mathbb{R}^d\to\mathbb{R}$ as a single hidden-layer ReLU network with an unbounded number of units (infinite width), but where the Euclidean norm of the weights is bounded, including precisely characterizing which functions can be realized with finite norm. This was settled for univariate functions in Savarese et al. (2019), where it was shown that the required norm is determined by the $L_1$-norm of the second derivative of the function. We extend the characterization to multivariate functions (i.e., networks with $d$ input units), relating the required norm to the $L_1$-norm of the Radon transform of a $(d+1)/2$-power Laplacian of the function. This characterization allows us to show that all functions in Sobolev spaces $W^{s,1}(\mathbb{R})$, $s \geq d+1$, can be represented with bounded norm, to calculate the required norm for several specific functions, and to obtain a depth separation result. These results have important implications for understanding generalization performance and the distinction between neural networks and more traditional kernel learning.
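The univariate statement quoted above can be checked on a finite network. For f(x) = sum_i a_i relu(w_i x - b_i) with distinct kinks, f' is piecewise constant and the integral of |f''| is the sum of its jumps, sum_i |a_i w_i|. Rescaling unit i by c_i > 0 leaves f unchanged, and the best choice of c_i makes 0.5 * sum_i (a_i^2 + w_i^2) equal to the same quantity. This is only an illustration for a finite-width network, with biases excluded from the norm (an assumption of this sketch), not a reproduction of the paper's infinite-width results; the network size and random parameters are arbitrary.

```python
import numpy as np

def relu_net(x, a, w, b):
    """f(x) = sum_i a_i * relu(w_i * x - b_i): one hidden layer, scalar input and output."""
    return np.maximum(np.outer(x, w) - b, 0.0) @ a

rng = np.random.default_rng(0)
k = 5
a = rng.normal(size=k)
w = rng.normal(size=k)
kinks = rng.uniform(-3.0, 3.0, size=k)
b = w * kinks  # unit i bends at x = kinks[i]

# f' is piecewise constant, so the integral of |f''| is the total size of its jumps
x = np.linspace(-5.0, 5.0, 40001)
f_prime = np.gradient(relu_net(x, a, w, b), x)
l1_second_derivative = np.sum(np.abs(np.diff(f_prime)))

# Rescaling unit i by c_i > 0 (a_i / c_i, c_i * w_i, c_i * b_i) leaves f unchanged;
# c_i = sqrt(|a_i| / |w_i|) minimizes 0.5 * (a_i**2 + w_i**2)
c = np.sqrt(np.abs(a) / np.abs(w))
a_s, w_s, b_s = a / c, w * c, b * c
min_weight_norm = 0.5 * np.sum(a_s ** 2 + w_s ** 2)

# All three agree up to discretization error
print(l1_second_derivative, min_weight_norm, np.sum(np.abs(a * w)))
```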
