All the ICLR 2017 submissions are under open review. Here are two papers submitted to the conference that relate to the general theme of mapping ML algorithms to hardware:

Ternary Weight Decomposition and Binary Activation Encoding for Fast and Compact Neural Network by Mitsuru Ambai, Takuya Matsumoto, Takayoshi Yamashita and Hironobu Fujiyoshi

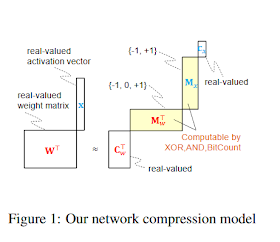

This paper aims to reduce test-time computational load of a deep neural network. Unlike previous methods which factorize a weight matrix into multiple real-valued matrices, our method factorizes both weights and activations into integer and non-integer components. In our method, the real-valued weight matrix is approximated by a multiplication of a ternary matrix and a real-valued coefficient matrix. Since the ternary matrix consists of three integer values, {-1, 0, +1}, it only consumes 2 bits per element. At test-time, an activation vector that passed from a previous layer is also transformed into a weighted sum of binary vectors, {-1, +1}, which enables fast feed-forward propagation based on simple logical operations: AND, XOR, and bit count. This makes it easier to deploy a deep network on low-power CPUs or to design specialized hardware.

In our experiments, we tested our method on three different networks: a CNN for handwritten digits, VGG-16 model for ImageNet classification, and VGG-Face for large-scale face recognition. In particular, when we applied our method to three fully connected layers in the VGG-16, 15x acceleration and memory compression up to 5.2% were achieved with only a 1.43% increase in the top-5 error. Our experiments also revealed that compressing convolutional layers can accelerate inference of the entire network in exchange for a slight increase in error.

Sparsely Connected Neural Networks: Towards Efficient VLSI Implementation of Deep Neural Networks by Arash Ardakani, Carlo Condo and Warren J. Gross

Recently deep neural networks have received considerable attention due to their ability to extract and represent high-level abstractions in data sets. Deep neural networks such as fully-connected and convolutional neural networks have shown excellent performance on a wide range of recognition and classification tasks. However, their hardware implementations currently suffer from large silicon area and high power consumption due to their high degree of complexity. The power/energy consumption of neural networks is dominated by memory accesses, the majority of which occur in fully-connected networks. In fact, they contain most of the deep neural network parameters. In this paper, we propose sparsely-connected networks, by showing that the number of connections in fully-connected networks can be reduced by up to 90% while improving the accuracy performance on three popular datasets (MNIST, CIFAR10 and SVHN). We then propose an efficient hardware architecture based on linear-feedback shift registers to reduce the memory requirements of the proposed sparsely-connected networks. The proposed architecture can save up to 90% of memory compared to the conventional implementations of fully-connected neural networks. Moreover, implementation results show up to 84% reduction in the energy consumption of a single neuron of the proposed sparsely-connected networks compared to a single neuron of fully-connected neural networks.
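Both abstracts rest on small bit-level primitives, so here is a rough Python sketch of each, purely as an illustration and not the authors' implementations. For the first paper, `ternary_binary_dot` computes the dot product of a {-1, 0, +1} weight vector with a {-1, +1} activation vector using only AND, XOR and bit count; the bit packing (one mask word plus one sign word) is my assumption. For the second, `sparse_mask` regenerates a connectivity mask from a 16-bit LFSR seed instead of storing it; the tap positions, the seed `0xACE1` and the threshold rule for keeping a connection are also my choices, not details taken from the abstract.

```python
def pack_bits(values, predicate):
    """Pack a sequence into an int: bit i is set when predicate(values[i]) holds."""
    bits = 0
    for i, v in enumerate(values):
        if predicate(v):
            bits |= 1 << i
    return bits


def ternary_binary_dot(weights, activations):
    """Dot product of {-1, 0, +1} weights with {-1, +1} activations.

    Uses only AND, XOR and bit count on the packed words.
    """
    mask = pack_bits(weights, lambda w: w != 0)      # positions of non-zero weights
    w_sign = pack_bits(weights, lambda w: w > 0)     # bit set means +1
    x_sign = pack_bits(activations, lambda x: x > 0)
    nonzero = bin(mask).count("1")
    # A sign mismatch at a non-zero weight contributes -1, a match contributes +1.
    mismatches = bin((w_sign ^ x_sign) & mask).count("1")
    return nonzero - 2 * mismatches


def lfsr16(seed):
    """16-bit Fibonacci LFSR with taps at bits 16, 14, 13 and 11 (assumed choice)."""
    state = seed & 0xFFFF
    if state == 0:
        raise ValueError("an LFSR seed must be non-zero")
    while True:
        bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
        state = (state >> 1) | (bit << 15)
        yield state


def sparse_mask(rows, cols, keep_fraction, seed=0xACE1):
    """Regenerate a boolean connectivity mask from the seed alone.

    A connection is kept when the LFSR state falls below a threshold
    (an illustrative rule, not the paper's architecture).
    """
    gen = lfsr16(seed)
    threshold = int(keep_fraction * 0x10000)
    return [[next(gen) < threshold for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    print(ternary_binary_dot([1, -1, 0, 1], [1, 1, -1, -1]))
    mask = sparse_mask(4, 8, keep_fraction=0.1)
    print(sum(map(sum, mask)), "of", 4 * 8, "connections kept")
```

The point of the second half is that only the seed needs to be stored: calling `sparse_mask` again with the same arguments reproduces the same mask, which is the kind of memory saving the LFSR-based architecture is after.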
