Here is an interesting piece of work in which random features are used to approximate the control policy in reinforcement learning, and deep auto-encoders are used to find a compact latent representation of the high-dimensional input.

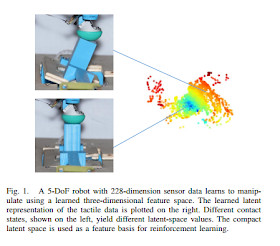

For many tasks, tactile or visual feedback is helpful or even crucial. However, designing controllers that take such high-dimensional feedback into account is non-trivial. Therefore, robots should be able to learn tactile skills through trial and error by using reinforcement learning algorithms. The input domain for such tasks, however, might include strongly correlated or non-relevant dimensions, making it hard to specify a suitable metric on such domains. Auto-encoders specialize in finding compact representations, where defining such a metric is likely to be easier. Therefore, we propose a reinforcement learning algorithm that can learn non-linear policies in continuous state spaces, which leverages representations learned using auto-encoders. We first evaluate this method on a simulated toy task with visual input. Then, we validate our approach on a real-robot tactile stabilization task.
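To make the random-features idea a bit more concrete, here is a minimal sketch in NumPy. It is not the authors' implementation: it assumes the auto-encoder has already produced a low-dimensional latent code `z`, uses random Fourier features as one common kind of random feature map, and puts a linear policy on top. The names `make_rff`, `rbf_kernel`, `bandwidth`, and `theta`, and all the sizes, are made up for illustration.

```python
import numpy as np


def make_rff(latent_dim, num_features, bandwidth, rng):
    """Build a random Fourier feature map approximating an RBF kernel.

    Assumption: the kernel is k(x, y) = exp(-||x - y||^2 / (2 * bandwidth^2)),
    so the frequencies are drawn from N(0, 1 / bandwidth^2).
    """
    W = rng.normal(0.0, 1.0 / bandwidth, size=(num_features, latent_dim))
    b = rng.uniform(0.0, 2.0 * np.pi, size=num_features)

    def features(z):
        z = np.atleast_2d(z)  # shape (n, latent_dim)
        return np.sqrt(2.0 / num_features) * np.cos(z @ W.T + b)

    return features


def rbf_kernel(x, y, bandwidth):
    """Exact RBF kernel value, used only to check the approximation."""
    return np.exp(-np.sum((x - y) ** 2) / (2.0 * bandwidth ** 2))


rng = np.random.default_rng(0)
latent_dim, num_features, bandwidth = 3, 5000, 1.0  # illustrative sizes
features = make_rff(latent_dim, num_features, bandwidth, rng)

# Stand-ins for latent codes that an auto-encoder's encoder would output.
z1 = rng.normal(size=latent_dim)
z2 = rng.normal(size=latent_dim)

# The inner product of feature vectors approximates the kernel value.
approx = (features(z1) @ features(z2).T).item()
exact = rbf_kernel(z1, z2, bandwidth)

# A policy that is linear in the features is non-linear in the latent code.
# theta is random here; a reinforcement learning algorithm would fit it.
theta = rng.normal(size=(num_features, 1))
action = features(z1) @ theta  # shape (1, 1): one scalar action

print(f"approx kernel: {approx:.3f}, exact kernel: {exact:.3f}")
```

The point of working in the latent space is the one the abstract makes: a distance-based kernel is much easier to define on a compact code than on raw pixels or tactile readings.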
