One of the co-author of the following preprint, Leslie Greengard, is one of the authors of the Fast Multipole Method, a matrix factorization method that changed many fields when it appeared. This factorization uses the low rankedness of blocks of dense matrices. I wonder if there are matrices where it would be useful to use a robust PCA on these blocks.

Fast Direct Methods for Gaussian Processes and the Analysis of NASA Kepler Mission Data by Sivaram Ambikasaran, Daniel Foreman-Mackey, Leslie Greengard, David W. Hogg, Michael O'Neil

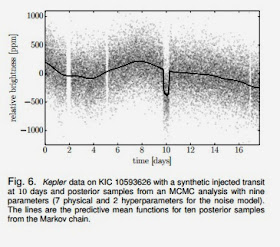

A number of problems in probability and statistics can be addressed using the multivariate normal (or multivariate Gaussian) distribution. In the one-dimensional case, computing the probability for a given mean and variance simply requires the evaluation of the corresponding Gaussian density. In the $n$-dimensional setting, however, it requires the inversion of an $n \times n$ covariance matrix, $C$, as well as the evaluation of its determinant, $\det(C)$. In many cases, the covariance matrix is of the form $C = \sigma^2 I + K$, where $K$ is computed using a specified kernel, which depends on the data and additional parameters (called hyperparameters in Gaussian process computations). The matrix $C$ is typically dense, causing standard direct methods for inversion and determinant evaluation to require $\mathcal O(n^3)$ work. This cost is prohibitive for large-scale modeling. Here, we show that for the most commonly used covariance functions, the matrix $C$ can be hierarchically factored into a product of block low-rank updates of the identity matrix, yielding an $\mathcal O (n\log^2 n) $ algorithm for inversion, as discussed in Ambikasaran and Darve, $2013$. More importantly, we show that this factorization enables the evaluation of the determinant $\det(C)$, permitting the direct calculation of probabilities in high dimensions under fairly broad assumption about the kernel defining $K$. Our fast algorithm brings many problems in marginalization and the adaptation of hyperparameters within practical reach using a single CPU core. The combination of nearly optimal scaling in terms of problem size with high-performance computing resources will permit the modeling of previously intractable problems. We illustrate the performance of the scheme on standard covariance kernels, and apply it to a real data set obtained from the $Kepler$ Mission.

From [1]

from the paper:

The source code for the algorithm has been made available on GitHub. The HODLR package for solving linear systems and computing determinants is available at https://github.com/sivaramambikasaran/ HODLR [3] and the Python Gaussian process package [18], george, has been made available at https://github.com/dfm/george.

No comments:

Post a Comment