Back in 2004, Emmanuel Candes, Justin Romberg and Terry Tao put out a paper [4] (in parallel with the one of Dave Donoho) that started the whole compressive sensing adventure [4,5, 24]. From there on, adding an a priori knowledge (sparsity) on a solution to an underdetermined system of linear equations provided enough constraints to choose easily a solution out of an infinite number of them. By "easy" one actually means that a solution was found for a set of problems that used to require an exponential number of computations. In that same paper, a figure showed quite clearly that the computability of the reconstruction of the solution hinged on some intrinsic parameter of the system of equations (size of the linear system and sparsity of the solution) [4].

About three years later to the day, Ben Recht, Maryam Fazel, and Pablo Parrilo [25,26], showed that this empirical phase transition phenomena was also at play for higher dimensional objects such as matrices.

About a year later, as I was quietly enjoying a meeting on Nonlinear Approximation Techniques Using L1 in Aggieland, I nearly spilled my coffee during Jared's presentation. I had to ask:

You did what ? ... you mean to say you included the RIP(1) measurement matrices of Indyk et al and they also fall on the same phase transition line ?

A pointed "Yes" was the answer.

I think I said a loud wow. It was May 2008, the Texas weather was destined to be hot. Ever since that day, I have called it the Donoho-Tanner phase transition. The significance of this line of investigation [1] was only beginning to sink in. Let me desribe the landscape. For about four years since [4,5], the only solid admissibility conditions for sparse recovery of underdetermined linear system was a tool called restricted isometry property or RIP for short. At that meeting, Yin Zhang [2] had shown us that RIP was not really the only property to go about, but now, there seemed to be a whole lot more admissible measurement ensembles that could do the trick and were not part of the RIP camp. Not only that but the phase transitions being sharp were becoming a natural "acid test" for the numerous combination of measurement matrices and solvers used in sparse recovery. It was also probably the first time that practitionners far removed from the usual mathematical fray could begin to care about P vs NP

Suddenly, it became easy to figure out the influence of noise [18] and how it would affect potentiel sensor/hardware:

or provide rule of thumbs in genomics [17]

or devise hardware modification for improving data acquisition [29]

one could also point right through the failings and the sometimes low quality of peer review [8,9,10]

or help in comparing if new measurement matrices were good enough [30]

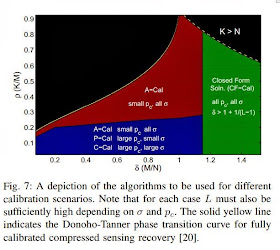

or help in devising better calibration algorithms for sensor devices [34]

or provide regions of interest of further improvement for specific solvers in case of noise [36]

or show when solvers other than L1 were doing better than L1 solvers [41]

eventually, it also helped in figuring out when something very new came up [11] :

From [11]

From [13] [14] [15]

In other words, in compressive sensing, these phase transitions maps were used as navigational instruments. And this was just the beginning.

What about Matrix Factorizations, do we have the same trend for higher dimensional objects such as matrices ?

Yes, much like [1], [19] showed that some sort of universality for a certain low rank matrix recovery operations. But it goes deeper as new results by Joel Tropp and Michael McCoy show a connexion between high dimensional geometry and convex optimization [7, 32,33]:

those new phase transitions could now be used to compare an various assortments of different matrix factorizations algorithms [7] such as matrix completion, robust PCA, and dictionary learning. MMV has recently been looked into.

What about NMF, a mainstay of science and engineering since 2000 ? well, let's just say, an innocuous statement made by Gabriel Peyre in 2008 still haunts me [35]. There is certainly some new results in that realm [38], and, in my view, they seem to point to similar issues at least in 1D:

but why stop there ? there is the unexplored case of tensors. Many different models for the human visual systems could probably be evaluated through this acid test. What about evaluating the whole spectrum of sensing modalities all the way to machine learning [39] ? Could the Grothendieck's Theorem [40] enlarge the set of problems with this sharp phase transition property ?

The world just got bigger

[1] Observed universality of phase transitions in high-dimensional geometry, with implications for modern data analysis and signal processing by Jared Tanner and David L. Donoho

[3] Meeting on Nonlinear Approximation Techniques Using L1 organized by Jean-Luc Guermond, Bojan Popov and Ron DeVore

[4] Robust Uncertainty Principles:Exact Signal Reconstruction from Highly Incomplete Frequency Information Emmanuel Candes, Justin Romberg, and Terence Tao. IEEE Trans. Inform. Theory, 52 489-509.

[5] Compressed Sensing, Information Theory, IEEE Transactions on Information Theory, April 2006, Donoho, D.L.

[6]BiG-AMP: Bilinear Generalized Approximate Message Passing, Jason T. Parker, Philip Schniter, Volkan Cevher

[7] The achievable performance of convex demixing, Convexity in source separation: Models, geometry, and algorithms Michael B. McCoy and Joel A. Tropp

[12] Exact Matrix Completion via Convex Optimization, Emmanuel J. Candes and Benjamin Recht

[14] Information-Theoretically Optimal Compressed Sensing via Spatial Coupling and Approximate Message Passing by David Donoho, Adel Javanmard and Andrea Montanari.

[15] Pushing the Boundaries in Compressive Sensing Accurate Prediction of Phase Transitions in Compressed Sensing via a Connection to Minimax Denoising by David Donoho, Iain Johnstone, Andrea Montanari.

[17] Compressed sensing and genomes and on Nuit Blanche ( Application of compressed sensing to genome wide association studies and genomic selection )

[18] Compressive Radar Imaging Using White Stochastic Waveforms by Mahesh C. Shastry, Ram M. Narayanan and Muralidhar Rangaswamy [Published in 2010]

[23] Sunday Morning Inisght: The 200 year gap

[25] Guaranteed Minimum-Rank Solutions of Linear Matrix Equations via Nuclear Norm Minimization by Benjamin Recht, Maryam Fazel, Pablo A. Parrilo

[27] Compressive Radar Imaging Using White Stochastic Waveforms by Mahesh C. Shastry, Ram M. Narayanan and Muralidhar Rangaswamy.

[28] Model Selection with Many More Variables than Observations, presentation by Victoria Stodden

[29] Universal and efficient compressed sensing by spread spectrum and application to realistic Fourier imaging techniques by Gilles Puy, Pierre Vandergheynst, Rémi Gribonval, Yves Wiaux.

[36] Performance Regions in Compressed Sensing from Noisy Measurements byJunan Zhu and Dror Baron (see also Sunday Morning Insight: Phase Transitions and Eigen-gaps as Roadmaps )

[38] Robust Near-Separable Nonnegative Matrix Factorization Using Linear Optimization - implementation -

[39] Sunday Morning Insight: A Quick Panorama of Sensing from Direct Imaging to Machine Learning [40] Grothendieck's Theorem, past and present, Gilles Pisier

[41] An Empirical-Bayes Approach to Recovering Linearly Constrained Non-Negative Sparse Signals

-countmin10-plus_minus_one_peaks.jpg)

Is SL0 still at the top of the heap of phase transitions? Did it hold up under scrutiny? I thought these optimization approaches were supposed to be not as good as the AMP type stuff.

ReplyDeleteThe main difference between AMP and SL0 is that AMP is much less complex (a few matrix-vector multiply) as opposed to an SVD for SL0. The point about SL0 was really was that it had been rejected from publications when in fact it did better than quite a few other solvers (before AMP). The second point was that a more robust version of SL0 against noise did not see the light of the day because of the initial rejection of the SL0 paper. Finally, there was recently a paper on an improvement of SL0 called SL1 or SL0-mod that did improve further the phase transition of the original SL0 although not to the extent of GAMP of Phil Schniter et al. Hope this helps,

ReplyDeleteIgor.

SL0 is definitely not at the top of the heap w.r.t phase transitions, but it is one of the better algorithms for the student-t type of signals that come out of wavelet transforms and DCTs and such. For other signal types, however, it can perform quite poorly.

ReplyDeleteIn terms of speed, SL0 is pretty fast, but it is not as fast as greedy methods for small problems, nor as fast as first-order algorithms (FISTA, SPGL1, AMP, etc.) for large problems because of its complexity scaling.

The preprint http://arxiv.org/abs/1207.3107 has numerical evidence of these claims.

Thank you Anonymous that was very thorough. As a matter of note, the iinitial issue was really about the fact that SL0 got rejected for publication when in fact it would perform better than a few type other solvers . The point being that the phase transition is really the only acid test here as the paper, you mention, shows.

ReplyDeleteIgor.