Here is something interesting not far from the Random Features / Random Kitchen Sinks approach (see the recent Fast Functions via Randomized Algorithms: Fastfood versus Random Kitchen Sinks ). The comparison to Kitchen Sinks is actually mentioned in 5.3. Let us also note the Johnson-Lindenstrauss Lemma for Gaussian Objects in 3.3. Of related interest are [1] and the recent [2].

Linear Regression with Random Projections by  Odalric Maillard, Rémi Munos. The abstract reads:

Odalric Maillard, Rémi Munos. The abstract reads:

Join the CompressiveSensing subreddit or the Google+ Community and post there !

Liked this entry ? subscribe to Nuit Blanche's feed, there's more where that came from. You can also subscribe to Nuit Blanche by Email, explore the Big Picture in Compressive Sensing or the Matrix Factorization Jungle and join the conversations on compressive sensing, advanced matrix factorization and calibration issues on Linkedin.

Liked this entry ? subscribe to Nuit Blanche's feed, there's more where that came from. You can also subscribe to Nuit Blanche by Email, explore the Big Picture in Compressive Sensing or the Matrix Factorization Jungle and join the conversations on compressive sensing, advanced matrix factorization and calibration issues on Linkedin.

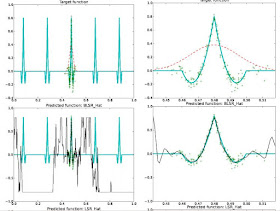

We investigate a method for regression that makes use of a randomly generated subspace $G_P$ (of finite dimension $P$) of a given large (possibly infinite) dimensional function space $F$, for example, $L_{2}([0,1]^d)$. $G_P$ is defined as the span of $P$ random features that are linear combinations of a basis functions of $F$ weighted by random Gaussian i.i.d.~coefficients. We show practical motivation for the use of this approach, detail the link that this random projections method share with RKHS and Gaussian objects theory and prove, both in deterministic and random design, approximation error bounds when searching for the best regression function in $G_P$ rather than in $F$, and derive excess risk bounds for a specific regression algorithm (least squares regression in $G_P$). This paper stresses the motivation to study such methods, thus the analysis developed is kept simple for explanations purpose and leaves room for future developments.

[1] Uniform Approximation of Functions with Random Bases, Ali Rahimi and Benjamin Recht

[2] Nystrom Method vs Random Fourier Features:: A Theoretical and Empirical Comparison Tianbao Yang, Yu-Feng Li, Mehrdad Mahdavi, Rong Jin, Zhi-Hua Zhou

No comments:

Post a Comment