As you probably recall (see here), one of the main issues with the Random Lens Imager, a system I consider to be a truly innovative Compressive Sensing system, is the amount of time spent in the calibration process. For what it's worth, I note that nearly three years have passed since the report came out and, to my limited knowledge, it has still not been published. This makes me wonder sometimes why truly innovative approaches have such difficulty making it into the literature.

In a presentation by Yair Rivenson and Adrian Stern on An Efficient Method for Multi-Dimensional Compressive Imaging, one can see the problem being expressed in plain words:

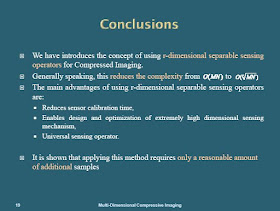

and how their approach (using separable sensing systems) can reduce that burden. The price to pay for not spending years in the calibration process seems to be more than a fair one:

The authors also want to use this type of approach for r-dimensional signals where r is greater than 2.

A more in-depth explanation of the presentation's results can be found in Compressed Imaging with a Separable Sensing Operator by Yair Rivenson and Adrian Stern. The abstract reads:

Compressive imaging (CI) is a natural branch of compressed sensing (CS). Although a number of CI implementations have started to appear, the design of efficient CI system still remains a challenging problem. One of the main difficulties in implementing CI is that it involves huge amounts of data, which has far-reaching implications for the complexity of the optical design, calibration, data storage and computational burden. In this paper, we solve these problems by using a two-dimensional separable sensing operator. By so doing, we reduce the complexity by factor of 10^6 for megapixel images. We show that applying this method requires only a reasonable amount of additional samples.

Let us recall that we have already seen this approach before (see the work of Yin Zhang and others like Thong Do and John Sidles (see comments)), but it certainly is a good thing to now see the minimal price to be paid in exchange for this Kronecker product approach to imaging and other systems.
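To make the Kronecker product idea concrete, here is a minimal NumPy sketch. It is only an illustration, not the authors' implementation: the names `separable_measure`, `kron_measure` and `storage_ratio` are mine, and I assume a square n x n image sensed with two m x n factors. It shows that applying the two small factors to the rows and columns of the image gives the same measurements as the large Kronecker matrix acting on the vectorized image, and it counts how many matrix entries would have to be stored or calibrated in each case.

```python
import numpy as np

def separable_measure(X, phi_col, phi_row):
    """Sense image X with a separable operator: Y = phi_col @ X @ phi_row.T."""
    return phi_col @ X @ phi_row.T

def kron_measure(X, phi_col, phi_row):
    """Same measurements, using the explicit Kronecker matrix on the
    row-major vectorized image (only feasible for small sizes)."""
    phi = np.kron(phi_col, phi_row)      # shape (m_col*m_row, n_col*n_row)
    y = phi @ X.reshape(-1)              # row-major vectorization of X
    return y.reshape(phi_col.shape[0], phi_row.shape[0])

def storage_ratio(n, m):
    """Entries of the dense (m*m) x (n*n) operator divided by the entries
    of the two m x n factors (hypothetical square setup)."""
    dense_entries = (m * m) * (n * n)
    separable_entries = 2 * m * n
    return dense_entries / separable_entries

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, m = 16, 8                         # tiny sizes so np.kron stays small
    X = rng.standard_normal((n, n))
    phi_col = rng.standard_normal((m, n))
    phi_row = rng.standard_normal((m, n))
    same = np.allclose(separable_measure(X, phi_col, phi_row),
                       kron_measure(X, phi_col, phi_row))
    print("separable and Kronecker measurements agree:", same)
    print("storage ratio for n = m = 1000:", storage_ratio(1000, 1000))
```

Under these assumptions the ratio is m*n/2, which for a megapixel image with m close to n is within a factor of two of the 10^6 quoted in the abstract. The exact accounting in the paper may differ.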

There are really two other ways to reduce the calibration burden of finding the point spread function (or the transfer function for higher dimensional problems). One could assume that the measurement matrix is sparse and treat its estimation as a compressed sensing problem, namely Blind Deconvolution. Or one could use a physical system that implements a sparse measurement system (RIP-1 matrices), such as in the new paper: Expander-based Compressed Sensing in the presence of Poisson Noise by Sina Jafarpour, Rebecca Willett, Maxim Raginsky, Robert Calderbank. The abstract reads:

This paper provides performance bounds for compressed sensing in the presence of Poisson noise using expander graphs. The Poisson noise model is appropriate for a variety of applications, including low-light imaging and digital streaming, where the signal-independent and/or bounded noise models used in the compressed sensing literature are no longer applicable. In this paper, we develop a novel sensing paradigm based on expander graphs and propose a MAP algorithm for recovering sparse or compressible signals from Poisson observations. The geometry of the expander graphs and the positivity of the corresponding sensing matrices play a crucial role in establishing the bounds on the signal reconstruction error of the proposed algorithm. The geometry of the expander graphs makes them provably superior to random dense sensing matrices, such as Gaussian or partial Fourier ensembles, for the Poisson noise model. We support our results with experimental demonstrations.
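As a rough sketch of the kind of measurement setup described in this abstract, the snippet below builds a sparse, nonnegative binary matrix with exactly d ones per column and draws Poisson counts from it. Several things here are my assumptions rather than the paper's construction: the helper names `sparse_binary_matrix` and `poisson_measurements` are hypothetical, a random left-d-regular bipartite graph is an expander only with high probability (the code does not check expansion), the paper may scale its sensing matrix differently, and the MAP recovery step is not reproduced.

```python
import numpy as np

def sparse_binary_matrix(m, n, d, rng):
    """Adjacency matrix of a random bipartite graph in which each of the
    n columns (signal entries) connects to exactly d of the m rows."""
    A = np.zeros((m, n))
    for j in range(n):
        rows = rng.choice(m, size=d, replace=False)  # d distinct rows
        A[rows, j] = 1.0
    return A

def poisson_measurements(A, x, rng):
    """Draw photon counts y ~ Poisson(A @ x) for a nonnegative signal x."""
    return rng.poisson(A @ x)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n, d, k = 40, 100, 5, 4
    A = sparse_binary_matrix(m, n, d, rng)
    x = np.zeros(n)
    x[rng.choice(n, size=k, replace=False)] = 50.0   # sparse, nonnegative
    y = poisson_measurements(A, x, rng)
    print("ones per column:", int(A.sum(axis=0)[0]))
    print("nonzero entries stored:", int(A.sum()), "of", m * n)
    print("first measurements:", y[:10])
```

The point for calibration is that such a matrix has only d*n nonzero entries to characterize, instead of the m*n entries of a dense random matrix.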
