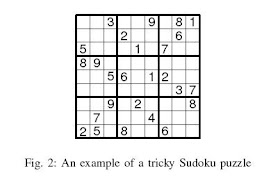

Two items first. In yesterday's entry on the Sudoku paper ( Linear Systems, Sparse Solutions, and Sudoku by Prabhu Babu, Kristiaan Pelckmans, Petre Stoica, and Jian Li) it is interesting to find out that a re-weighted scheme seems to be doing better than some game specific greedy algorithm. Furthermore, at the end of the paper, we can read the following:

Two items first. In yesterday's entry on the Sudoku paper ( Linear Systems, Sparse Solutions, and Sudoku by Prabhu Babu, Kristiaan Pelckmans, Petre Stoica, and Jian Li) it is interesting to find out that a re-weighted scheme seems to be doing better than some game specific greedy algorithm. Furthermore, at the end of the paper, we can read the following:The presently known sufficient conditions on A for checking the equivalence of P0 and P1, like the restricted isometry property (RIP) [5] and Kashin, Garnaev, Gluskin (KGG) inequality [6], do not hold for Sudoku. So analyzing the equivalence of l0 and l1 norm solutions in the context of Sudoku requires novel conditions for sparse recovery.

I am not sure that RIP of [5] is the condition to check for here, (RIP-2) more likely the sparsity of the measurement matrix implies some type of RIP-1 condition. I also thought KGG was a necessary and sufficient condition albeit an NP-Hard at that. Other conditions can be found on the Compressive Sensing Big Picture page.

In the super-resolution paper mentioned earlier this week, entitled Super-resolution ghost imaging via compressive sampling reconstruction by Wenlin Gong and Shensheng Han, Wenlin confirms to me that only l1_magic was used not GPSR as the reference would tend to indicate. I am sure we are going to see some improvement in the near future. Other reconstruction solvers can be found in the Compressive Sensing Big Picture page too.

David Gross just sent me the following:

...I'm a physicist and new to the field. Originally, some colleagues and me got interested in compressed sensing and matrix completion in the context of quantum state tomography (meaning the experimental characterization of a quantum system). Our paper arXiv:0909.3304 was mentioned on your blog.

The methods we needed to develop in order to translate known results on matrix completion by Candes, Recht, Tao and others to quantum mechanics proved far more general than anticipated. We can now show that a low-rank (n x n)-matrix may be recovered from basically O(n r log^2 n) randomly selected expansion coefficients with respect to any matrix basis. The matrix completion problem is just a special case.

Most importantly, though, the complexity of the proofs was reduced substantially. The spectacular argument by Candes and Tao in arXiv:0903.1476 covered about 40 pages. The new paper implies these results, but is much simpler to digest.

The first version of the paper went onto the arxiv two weeks ago: arXiv:0910.1879. However, it significantly evolved since then. In some cases the bounds are now down to O(n r log n), which -- I'm happy to say -- is tight up to multiplicative factors.

There is an obvious challenge to be met. The O(n r log n)-bounds do not currently extend to the original matrix completion problem. They come tantalizingly close, though, with only one single lemma obstructing a more general result. The precise problem is pointed out at the end of Section III B.

Before submitting the paper, I plan to add a final note. The statements all continue to be true if instead of a matrix basis a "tight frame" (a.k.a. "overcomplete basis") is used.

I should also point out Benjamin Recht's recent and strongly related pre-print arXiv:0910.0651 to you. He independently translated some of the results in our earlier paper on quantum tomography to the matrix completion problem (apparently overlooking our announcement of exactly the same result in the physics paper).

Let us note that the last preprint of Benjamin Recht was recently updated. But first, here is the new revision entitled: Recovering low-rank matrices from few coefficients in any basis by David Gross

We establish novel techniques for analyzing the problem of low-rank matrix recovery. The methods are both considerably simpler, and more general than previous approaches. It is shown that an unknown n × n matrix of rank r can be efficiently reconstructed given knowledge of only O(nr \nu log2 n) randomly sampled expansion coefficients with respect to any given matrix basis. The number \nu quantifies the “degree of incoherence” between the unknown matrix and the basis. Existing work concentrated mostly on the problem of “matrix completion”, where one aims to recover a low-rank matrix from randomly selected matrix elements. Our result covers this situation as a special case. The proof consists of a series of relatively elementary steps, which stands in contrast to the highly involved methods previously employed to obtain comparable results. In cases where bounds had been known before, our estimates are slightly tighter. We discuss operator bases which are incoherent to all low-rank matrices simultaneously. For these bases, we show that O(nr \nu log n) randomly sampled expansion coefficients suffice to recover any low-rank matrix with high probability. The latter bound is tight up to multiplicative factors. This seems to be the first time the optimal order of the log-factor has been proved to be achievable for a matrix reconstruction problem where neither the basis nor the unknown matrix is randomized. This work is an expanded presentation of the recent pre-print [D. Gross, Y.-K. Liu, S. T. Flammia, S. Becker, and J. Eisert, arxiv:0909.3304] which was aimed at researches working in quantum information theory.

I also found the following: Probability of Unique Integer Solution to a System of Linear Equations by Olvi Mangasarian and Benjamin Recht. The abstract reads:

We consider a system of m linear equations in n variables Ax = d and give necessary and sufficient conditions for the existence of a unique solution to the system that is integer: x element of {−1,1}^n. We achieve this by reformulating the problem as a linear program and deriving necessary and sufficient conditions for the integer solution to be the unique primal optimal solution. We show that as long as m is larger than n/2, then the linear programming heuristic succeeds for most instances, but if m is less than n/2, the heuristic fails on most instances. We also demonstrate that these predictions match the empirical performance of the linear programming formulation to very high accuracy.

Enjoy!

Credit: NASA / GSFC / ASU, Via The Planetary Society Blog: Apollo 17 lander and flag! An image of the Apollo 17 lander, Challenger, captured by the Lunar Reconnaissance Orbiter Camera on October 1, 2009, includes not only the lander and astronaut tracks but also a fuzzy dark pixel at the location of the American flag erected there by astronauts Jack Schmitt and Gene Cernan.