From the conferences featured last week, here two additional papers:

Regularized Dictionary Learning for Sparse Approximation by Mehrdad Yaghoobi-Vaighan, Thomas Blumensath and Mike Davies. The abstract reads:

Sparse signal models approximate signals using a small number of elements from a large set of vectors, called a dictionary. The success of such methods relies on the dictionary fitting the signal structure. Therefore, the dictionary has to be designed to fit the signal class of interest. This paper uses a general formulation that allows the dictionary to be learned form the data with some a priori information about the dictionary. In this formulation a universal cost function is proposed and practical algorithms are presented to minimize this cost under different constraints on the dictionary. The proposed methods are compared with previous approaches using synthetic and real data. Simulations highlight the advantages of the proposed methods over other currently available dictionary learning strategies.

This article proposes a new framework to regularize linear inverse problems using the total variation on non-local graphs. This nonlocal graph allows to adapt the penalization to the geometry of the underlying function to recover. A fast algorithm computes iteratively both the solution of the regularization process and the non-local graph adapted to this solution. We show numerical applications of this method to the resolution of image processing inverse problems such as inpainting, super-resolution and compressive sampling.

We consider the conjectured O(N2+\epsilon) time complexity of multiplying any two N × N matrices A and B. Our main result is a deterministic Compressed Sensing (CS) algorithm that both rapidly and accurately computes A · B provided that the resulting matrix product is sparse/compressible. As a consequence of our main result we increase the class of matrices A, for any given N × N matrix B, which allows the exact computation of A·B to be carried out using the conjectured O(N2+\epsilon) operations. Additionally, in the process of developing our matrix multiplication procedure, we present a modified version of Indyk’s recently proposed extractor-based CS algorithm [16] which is resilient to noise.

From the Rice Compressed Sensing repository, we also have new papers:

Discriminative learned dictionaries for local image analysis by Julien Mairal, Francis Bach, Jean Ponce, Guillermo Sapiro, and Andrew Zisserman. The abtract reads:

Sparse signal models have been the focus of much recent research, leading to (or improving upon) state-of-the-art results in signal, image, and video restoration. This article extends this line of research into a novel framework for local image discrimination tasks, proposing an energy formulation with both sparse reconstruction and class discrimination components, jointly optimized during dictionary learning. This approach improves over the state of the art in texture segmentation experiments using the Brodatz database, and it paves the way for a novel scene analysis and recognition framework based on simultaneously learning discriminative and reconstructive dictionaries. Preliminary results in this direction using examples from the Pascal VOC06 and Graz02 datasets are presented as well.

Sparse representations for image classification: Learning discriminative and reconstructive non-parametric dictionaries by Fernando Rodriguez and Guillermo Sapiro. The abstract reads:

A framework for learning optimal dictionaries for simultaneous sparse signal representation and robust class classi cation is introduced in this paper. This problem for dictionary learning is solved by a class-dependent supervised simultaneous orthogonal matching pursuit, which learns the intra-class structure while increasing the inter-class discrimination, interleaved with an efficient dictionary update obtained via singular value decomposition. This framework addresses for the first time the explicit incorporation of both reconstruction and discrimination terms in the non-parametric dictionary learning and sparse coding energy. The work contributes to the understanding of the importance of learned sparse representations for signal classi cation, showing the relevance of learning discriminative and at the same time reconstructive dictionaries in order to achieve accurate and robust classi cation. The presentation of the underlying theory is complemented with examples with the standard MNIST and Caltech datasets, and results on the use of the sparse representation obtained from the learned dictionaries as local patch descriptors, replacing commonly used experimental ones.

There is also:

- J. Mairal, F. Bach, J. Ponce, G. Sapiro and A. Zisserman. Discriminative Learned Dictionaries for Local Image Analysis. IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, Alaska, USA, 2008.

- J. Mairal, G. Sapiro and M. Elad. Multiscale sparse image representation with learned dictionaries. In IEEE International Conference on Image Processing, San Antonio, Texas, USA, 2007. Oral presentation: slides

and soem convergence results for reweighted Lp algorithms by Ingrid Daubechies, Ronald DeVore, Massimo Fornasier, and C. Sinan Güntürk entitled:Iteratively re-weighted least squares minimization for sparse recovery. The abstract reads:

Under certain conditions (known as the Restricted Isometry Property or RIP) on the m×N matrix \Phi (where m less than N), vectors x element of R^N that are sparse (i.e. have most of their entries equal to zero) can be recovered exactly from y := \Phi x even though \Phi^−1(y) is typically an (N − m)- dimensional hyperplane; in addition x is then equal to the element in \Phi^−1(y) of minimal ℓ1-norm. This minimal element can be identified via linear programming algorithms. We study an alternative method of determining x, as the limit of an Iteratively Re-weighted Least Squares (IRLS) algorithm. The main step of this IRLS finds, for a given weight vector w, the element in \Phi^−1(y) with smallest ℓ2(w)-norm. If x(n) is the solution at iteration step n, then the new weight w(n) is defined by w^(n)_ i := [|x^(n)_ i |^2 + \epsilon_n^2]^(−1/2) , i = 1, . . . ,N, for a decreasing sequence of adaptively defined ǫn; this updated weight is then used to obtain x(n+1) and the process is repeated. We prove that when \Phi satisfies the RIP conditions, the sequence x(n) converges for all y, regardless of whether \Phi^−1(y) contains a sparse vector. If there is a sparse vector in \Phi^−1(y), then the limit is this sparse vector, and when x(n) is sufficiently close to the limit, the remaining steps of the algorithm converge exponentially fast (linear convergence in the terminology of numerical optimization). The same algorithm with the “heavier” weight w^(n)_ i := [|x^(n)_ i |^2 +\epsilon_n^2]^(−1+ τ /2) , i = 1, . . . ,N, where τ is between 0 and 1, can recover sparse solutions as well; more importantly, we show its local convergence is superlinear and approaches a quadratic rate for τ approaching to zero.

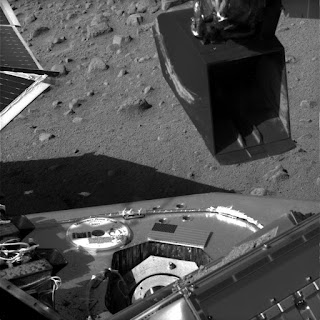

Credit: NASA/JPL-Caltech/University of Arizona/Texas A&M. Phoenix Sol 33.