The abstract reads:

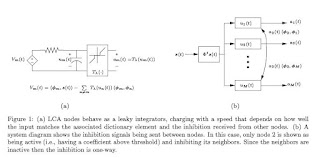

While evidence indicates that neural systems may be employing sparse approximations to represent sensed stimuli, the mechanisms underlying this ability are not understood. We describe a locally competitive algorithm (LCA) that solves a collection of sparse coding principles minimizing a weighted combination of mean-squared error (MSE) and a coefficient cost function. LCAs are designed to be implemented in a dynamical system composed of many neuron-like elements operating in parallel. These algorithms use thresholding functions to induce local (usually one-way) inhibitory competitions between nodes to produce sparse representations. LCAs produce coefficients with sparsity levels comparable to the most popular centralized sparse coding algorithms while being readily suited for neural implementation. Additionally, LCA coefficients for video sequences demonstrate inertial properties that are both qualitatively and quantitatively more regular (i.e., smoother and more predictable) than the coefficients produced by greedy algorithms.
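To make the abstract a bit more concrete, here is a minimal sketch of the kind of dynamics it describes: each node integrates its input, and nodes whose state crosses a threshold inhibit their neighbors in proportion to how much their dictionary elements overlap. Everything in it is an illustrative assumption rather than the authors' implementation: the function names `soft_threshold` and `lca`, the soft-thresholding choice (which corresponds to an L1 coefficient cost), the parameter values, the plain Euler integration, and the toy three-atom dictionary.

```python
import math

def soft_threshold(u, lam):
    """Shrink u toward zero by lam; anything within [-lam, lam] becomes 0."""
    return math.copysign(max(abs(u) - lam, 0.0), u)

def dot(x, y):
    return sum(p * q for p, q in zip(x, y))

def lca(signal, atoms, lam=0.1, tau=10.0, dt=1.0, n_steps=3000):
    """Euler-integrate tau * du/dt = b - u - G a, with a = soft_threshold(u).

    atoms is a list of unit-norm dictionary elements (hypothetical toy input).
    """
    n = len(atoms)
    # Feed-forward drive: correlation of each atom with the signal.
    b = [dot(atom, signal) for atom in atoms]
    # Lateral inhibition weights: overlap between distinct atoms.
    inhibit = [[dot(atoms[i], atoms[j]) if i != j else 0.0 for j in range(n)]
               for i in range(n)]
    u = [0.0] * n  # internal node states
    for _ in range(n_steps):
        # Only nodes above threshold are active and inhibit the others.
        a = [soft_threshold(ui, lam) for ui in u]
        u = [u[i] + (dt / tau) * (b[i] - u[i] - dot(inhibit[i], a))
             for i in range(n)]
    return [soft_threshold(ui, lam) for ui in u]

# Toy overcomplete dictionary in 2-D: two axis atoms plus a diagonal atom.
r = 1.0 / math.sqrt(2.0)
atoms = [[1.0, 0.0], [0.0, 1.0], [r, r]]
coeffs = lca([1.0, 1.0], atoms)
print(coeffs)
```

For this toy signal, which lies along the diagonal atom, the competition should leave only that atom active, with the two axis-aligned nodes pushed back below threshold.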

This is a fascinating article. It ends with the equally intriguing:

The current limitations of neurophysiological recording mean that exploring the sparse coding hypothesis must rely on testing specific proposed mechanisms. Though the LCAs we have proposed appear to map well to known neural architectures, they still lack the biophysical detail necessary to be experimentally testable. We will continue to build on this work by mapping these LCAs to a detailed neurobiological population coding model that can produce verifiable predictions. Furthermore, the combination of sparsity and regularity induced in LCA coefficients may serve as a critical front-end stimulus representation that enables visual perceptual tasks, including pattern recognition, source separation and object tracking. By using simple computational primitives, LCAs also have the benefit of being implementable in analog hardware. An imaging system using VLSI to implement LCAs as a data collection front end has the potential to be extremely fast and energy efficient. Instead of digitizing all of the sensed data and using digital hardware to run a compression algorithm, analog processing would compress the data into sparse coefficients before digitization. In this system, time and energy resources would only be spent digitizing coefficients that are a critical component in the signal representation.

Eventually, I wonder how this greedy encoding algorithm would handle the hierarchical nature of the brain.

Reference:

[1] Emergence of Simple-Cell Receptive Field Properties by Learning a Sparse Code for Natural Images, Olshausen BA, Field DJ (1996). Nature, 381: 607-609.

Hi Igor,

There is a recent, related Nature article by Diego A. Gutnisky and Valentin Dragoi, Adaptive coding of visual information in neural populations, p220, Volume 452 Number 7184, 13 March 2008.

Thanks Doug.

I'll check it out.

Igor.