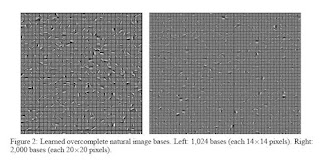

Sparse coding provides a class of algorithms for finding succinct representations of stimuli; given only unlabeled input data, it discovers basis functions that capture higher-level features in the data. However, finding sparse codes remains a very difficult computational problem. In this paper, we present efficient sparse coding algorithms that are based on iteratively solving two convex optimization problems: an L1-regularized least squares problem and an L2-constrained least squares problem. We propose novel algorithms to solve both of these optimization problems. Our algorithms result in a significant speedup for sparse coding, allowing us to learn larger sparse codes than possible with previously described algorithms. We apply these algorithms to natural images and demonstrate that the inferred sparse codes exhibit end-stopping and non-classical receptive field surround suppression and, therefore, may provide a partial explanation for these two phenomena in V1 neurons.
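
The alternating scheme in this abstract can be sketched in a few lines of NumPy. This is a minimal sketch, not the authors' method. The paper proposes feature-sign search for the L1-regularized step and a Lagrange-dual method for the L2-constrained step. Here both are swapped for simpler, slower solvers: ISTA for the coefficients and projected gradient for the bases. The objective scaling, the weight `lam`, the unit-norm constraint on each basis vector and the helper names are illustrative assumptions.

```python
import numpy as np


def soft_threshold(v, t):
    """Elementwise soft-thresholding, the proximal operator of t * |v|."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def objective(X, B, S, lam):
    """0.5 * ||X - B S||_F^2 + lam * ||S||_1 (illustrative scaling)."""
    return 0.5 * np.sum((X - B @ S) ** 2) + lam * np.sum(np.abs(S))


def update_codes(X, B, S, lam, n_iter=50):
    """L1-regularized least squares in S with B fixed, solved here by ISTA."""
    L = np.linalg.norm(B, 2) ** 2  # Lipschitz constant of the gradient B^T (B S - X)
    for _ in range(n_iter):
        S = soft_threshold(S - B.T @ (B @ S - X) / L, lam / L)
    return S


def update_bases(X, B, S, n_iter=50):
    """L2-constrained least squares in B with S fixed (||b_j|| <= 1), by projected gradient."""
    L = np.linalg.norm(S, 2) ** 2  # Lipschitz constant of the gradient (B S - X) S^T
    if L == 0.0:
        return B  # all codes are zero, so the objective does not depend on B
    for _ in range(n_iter):
        B = B - (B @ S - X) @ S.T / L
        B = B / np.maximum(np.linalg.norm(B, axis=0), 1.0)  # project columns onto the unit ball
    return B


def sparse_coding(X, n_bases, lam=0.1, n_outer=20, seed=0):
    """Alternate the two convex subproblems; returns bases, codes and objective history."""
    rng = np.random.default_rng(seed)
    B = rng.standard_normal((X.shape[0], n_bases))
    B /= np.linalg.norm(B, axis=0)
    S = np.zeros((n_bases, X.shape[1]))
    history = []
    for _ in range(n_outer):
        S = update_codes(X, B, S, lam)
        B = update_bases(X, B, S)
        history.append(objective(X, B, S, lam))
    return B, S, history


# Toy usage: random 16-dimensional vectors stand in for image patches.
rng = np.random.default_rng(1)
X = rng.standard_normal((16, 200))
B, S, history = sparse_coding(X, n_bases=32, lam=0.5)
print(history[0], history[-1])  # the objective does not increase between outer iterations
```

Each subproblem is convex, and each step uses the step size 1/L. As a result, every outer iteration leaves the objective non-increasing. The paper's contribution is to solve these same two subproblems much faster.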

They then used these algorithms to build a new machine learning framework called "self-taught learning". The paper, by Rajat Raina, Alexis Battle, Honglak Lee, Benjamin Packer and Andrew Ng, is entitled Self-taught Learning: Transfer Learning from Unlabeled Data. The abstract reads:

We present a new machine learning framework called "self-taught learning" for using unlabeled data in supervised classification tasks. We do not assume that the unlabeled data follows the same class labels or generative distribution as the labeled data. Thus, we would like to use a large number of unlabeled images (or audio samples, or text documents) randomly downloaded from the Internet to improve performance on a given image (or audio, or text) classification task. Such unlabeled data is significantly easier to obtain than in typical semi-supervised or transfer learning settings, making self-taught learning widely applicable to many practical learning problems. We describe an approach to self-taught learning that uses sparse coding to construct higher-level features using the unlabeled data. These features form a succinct input representation and significantly improve classification performance. When using an SVM for classification, we further show how a Fisher kernel can be learned for this representation.
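
The pipeline in this abstract has three stages. First, learn bases from unlabeled data. Next, re-represent the labeled examples as sparse activations over those bases. Finally, train a classifier on those activations. This is a minimal sketch under several assumptions:

- The data are random stand-ins for image patches.
- ISTA and projected gradient replace the paper's own sparse coding solvers.
- A nearest-centroid classifier replaces the SVM mentioned in the abstract.
- `learn_bases`, `encode` and `NearestCentroid` are hypothetical helper names, not an existing API.

```python
import numpy as np


def soft(v, t):
    """Elementwise soft-thresholding."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def encode(X, B, lam, n_iter=100):
    """Sparse activations of each column of X over the bases B (ISTA, started from zero)."""
    L = np.linalg.norm(B, 2) ** 2
    S = np.zeros((B.shape[1], X.shape[1]))
    for _ in range(n_iter):
        S = soft(S - B.T @ (B @ S - X) / L, lam / L)
    return S


def learn_bases(X_unlabeled, n_bases, lam, n_outer=20, seed=0):
    """Learn unit-norm bases from unlabeled data by alternating encoding and projected gradient."""
    rng = np.random.default_rng(seed)
    B = rng.standard_normal((X_unlabeled.shape[0], n_bases))
    B /= np.linalg.norm(B, axis=0)
    for _ in range(n_outer):
        S = encode(X_unlabeled, B, lam)
        L = np.linalg.norm(S, 2) ** 2
        if L == 0.0:
            continue  # all codes are zero: nothing to update
        for _ in range(20):
            B -= (B @ S - X_unlabeled) @ S.T / L
            B /= np.maximum(np.linalg.norm(B, axis=0), 1.0)
    return B


class NearestCentroid:
    """Stand-in classifier on the sparse features (the abstract uses an SVM)."""

    def fit(self, F, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([F[:, y == c].mean(axis=1) for c in self.classes_], axis=1)
        return self

    def predict(self, F):
        dists = ((F[:, :, None] - self.centroids_[:, None, :]) ** 2).sum(axis=0)
        return self.classes_[np.argmin(dists, axis=1)]


# Toy usage: the unlabeled data need not share the labeled task's classes or distribution.
rng = np.random.default_rng(0)
X_unlabeled = rng.standard_normal((20, 500))
B = learn_bases(X_unlabeled, n_bases=40, lam=0.3)

X_train = rng.standard_normal((20, 60))
y_train = np.repeat([0, 1], 30)
X_train[:, y_train == 1] += 1.0  # shift class 1 so the toy task is learnable
clf = NearestCentroid().fit(encode(X_train, B, 0.3), y_train)
pred = clf.predict(encode(X_train, B, 0.3))
print((pred == y_train).mean())  # training accuracy on the toy data
```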

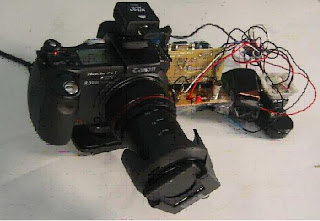

The subject of coded apertures in compressed sensing was mentioned before here and here. I found another series of intriguing papers on coded apertures for cameras. They do not perform compressed sensing per se, but they give a pretty good idea of how compressed sensing could be implemented. The project page is here; the paper, by Ashok Veeraraghavan, Ramesh Raskar, Amit Agrawal, Ankit Mohan and Jack Tumblin, is entitled Dappled Photography: Mask Enhanced Cameras for Heterodyned Light Fields and Coded Aperture Refocusing.

The abstract reads:

We describe a theoretical framework for reversibly modulating 4D light fields using an attenuating mask in the optical path of a lens based camera. Based on this framework, we present a novel design to reconstruct the 4D light field from a 2D camera image without any additional refractive elements as required by previous light field cameras. The patterned mask attenuates light rays inside the camera instead of bending them, and the attenuation recoverably encodes the rays on the 2D sensor. Our mask-equipped camera focuses just as a traditional camera to capture conventional 2D photos at full sensor resolution, but the raw pixel values also hold a modulated 4D light field. The light field can be recovered by rearranging the tiles of the 2D Fourier transform of sensor values into 4D planes, and computing the inverse Fourier transform. In addition, one can also recover the full resolution image information for the in-focus parts of the scene. We also show how a broadband mask placed at the lens enables us to compute refocused images at full sensor resolution for layered Lambertian scenes. This partial encoding of 4D ray-space data enables editing of image contents by depth, yet does not require computational recovery of the complete 4D light field.

A Frequently Asked Questions section on the two cameras is helpful for anyone who has never heard of 4D light field cameras.

The "4D" refers to the fact that, as with any image, we get 2D spatial information. Thanks to the mask, one can also obtain information about where the light rays are coming from, i.e. their angle. The four dimensions are therefore 2 spatial dimensions (x, y) and 2 angular dimensions. Most interesting is the discussion of the Optical Heterodyning Camera. It points out (see figure above) that a normal camera with a lens samples only a restricted portion of the full Fourier transform of the light field. In the heterodyning camera, the part of the Fourier transform that is normally cut off from the sensor is "heterodyned" back onto the sensor. For more information, the derivation of the Fourier transform of the mask used for optical heterodyning is here. One can foresee how a different type of sampling could yield a different Cramér-Rao bound on object range. Note that there is already a Bayesian approach to range estimation from a single shot, featured in the work of Ashutosh Saxena and Andrew Ng. In the Random Lens Imaging approach, on the other hand, depth could also be considered if some machine learning techniques were used.
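
Heterodyning itself is easy to see in one dimension. The toy below is only an analogue of the principle, not a model of the camera's optics. A "sensor" keeps only DFT bins with |f| ≤ 16, so a tone at bin 40 is lost. If the signal is first multiplied by a cosine carrier at bin 32, a copy of the tone shifts to the difference frequency, bin 8, which passes. The carrier is a hypothetical stand-in for the mask's modulation. All frequencies, the cutoff and the helper names are illustrative assumptions.

```python
import numpy as np


def lowpass(signal, cutoff):
    """Keep only DFT bins with |frequency| <= cutoff: a toy 'sensor bandwidth'."""
    spectrum = np.fft.fft(signal)
    freqs = np.fft.fftfreq(signal.size, d=1.0 / signal.size)  # integer bin frequencies
    spectrum[np.abs(freqs) > cutoff] = 0.0
    return np.fft.ifft(spectrum).real


def peak_bin(signal):
    """Return (bin index, magnitude) of the strongest non-negative frequency."""
    mag = np.abs(np.fft.rfft(signal))
    return int(np.argmax(mag)), float(mag.max())


n = 256
t = np.arange(n)
f_signal, f_carrier, cutoff = 40, 32, 16  # hypothetical values

x = np.cos(2 * np.pi * f_signal * t / n)         # detail beyond the sensor bandwidth
carrier = np.cos(2 * np.pi * f_carrier * t / n)  # stand-in for the mask's modulation

plain = lowpass(x, cutoff)            # conventional capture: the tone is cut off
mixed = lowpass(x * carrier, cutoff)  # heterodyned capture: a copy lands at 40 - 32 = 8

print(peak_bin(plain))  # magnitude ~0: nothing survives
print(peak_bin(mixed))  # peak at bin 8
```

Since the carrier frequency is known, the surviving peak at bin 8 can be mapped back to 8 + 32 = 40. This only works if the signal's spectrum is band-limited so that shifted copies do not overlap.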

Of related interest is the work of Ramesh Raskar and Amit Agrawal on Resolving Objects at Higher Resolution from a Single Motion-Blurred Image, which instead uses random coded exposure, i.e. random projections in time.

The abstract reads:

Motion blur can degrade the quality of images and is considered a nuisance for computer vision problems. In this paper, we show that motion blur can in-fact be used for increasing the resolution of a moving object. Our approach utilizes the information in a single motion-blurred image without any image priors or training images. As the blur size increases, the resolution of the moving object can be enhanced by a larger factor, albeit with a corresponding increase in reconstruction noise.

Traditionally, motion deblurring and super-resolution have been ill-posed problems. Using a coded-exposure camera that preserves high spatial frequencies in the blurred image, we present a linear algorithm for the combined problem of deblurring and resolution enhancement and analyze the invertibility of the resulting linear system. We also show a method to selectively enhance the resolution of a narrow region of high-frequency features, when the resolution of the entire moving object cannot be increased due to small motion blur. Results on real images showing up to four times resolution enhancement are presented.
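
A rough counting argument shows why a longer blur allows a larger resolution gain. This is back-of-the-envelope reasoning under simple assumptions, not the paper's invertibility analysis. Assume a 1D object with $m$ unknown samples at the target resolution, moving against a known (e.g. dark) background. The blur spans $k$ of those samples, and each sensor pixel integrates $r$ target-resolution samples, where $r$ is the enhancement factor. The blurred signal then has $m + k - 1$ samples at the target resolution, so the sensor records about $(m+k-1)/r$ values. Having at least as many measurements as unknowns requires

$$
\frac{m+k-1}{r} \;\ge\; m \quad\Longleftrightarrow\quad k \;\ge\; (r-1)\,m + 1 .
$$

For example, with $m = 20$ and $r = 4$, the blur must extend over at least $61$ target-resolution samples before the system is even square, since $(20 + 61 - 1)/4 = 20$. This is only a necessary condition: the blur kernel must also preserve high spatial frequencies, which is what the coded exposure is for.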

The project webpage is here. In traditional cameras, blurring occurs because the shutter stays open for the whole exposure while the scene moves.

In coded exposure, the shutter is instead opened and closed in a pattern during the exposure while the scene moves. The research by Ramesh Raskar and Amit Agrawal shows that random coded exposure allows the object to be retrieved as if it were static.
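
Why does fluttering the shutter help? A minimal 1D sketch makes it concrete, under simplifying assumptions of my own rather than the paper's:

- The blur is a circular convolution.
- The object moves one pixel per time slot.
- The 16-slot shutter pattern is a hypothetical one chosen for illustration, not the code used by Raskar and Agrawal.

A shutter left open for all 16 slots gives a box kernel whose DFT vanishes at every 4th bin, so those frequencies are destroyed. With n = 64 a power of two, a pattern with an odd number of open slots can be shown to have no DFT zeros. The blur can then be inverted exactly by division in the Fourier domain.

```python
import numpy as np

n = 64  # length of the periodic 1D scene (a power of two)

box = np.zeros(n)
box[:16] = 1.0  # conventional shutter: open for all 16 time slots

code = np.array([1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1], dtype=float)  # hypothetical pattern, 9 of 16 open
coded = np.zeros(n)
coded[:code.size] = code


def blur(x, kernel):
    """Circular convolution: each pixel sums the shifted copies seen while the shutter is open."""
    return np.fft.ifft(np.fft.fft(x) * np.fft.fft(kernel)).real


def deblur(y, kernel):
    """Invert the blur by Fourier division (breaks down where the kernel's DFT vanishes)."""
    return np.fft.ifft(np.fft.fft(y) / np.fft.fft(kernel)).real


rng = np.random.default_rng(0)
x = rng.random(n)  # toy 1D object

print(np.abs(np.fft.fft(box)).min())    # ~0: some spatial frequencies are lost
print(np.abs(np.fft.fft(coded)).min())  # > 0: every frequency survives
x_hat = deblur(blur(x, coded), coded)
print(np.max(np.abs(x_hat - x)))        # tiny: recovery up to rounding error
```

With noise in the measurements, the smallest DFT magnitude of the kernel governs how much the inversion amplifies that noise. This is why the choice of shutter pattern matters.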

I believe this is the first time random temporal coding has been used for imaging.

Unrelated: Laurent Duval tells me that two talks on subjects covered on this blog will take place in Paris, as part of the seminar series organized by Albert Cohen and Patrick Louis Combettes:

Tomorrow, Tuesday, January 22, 2008,

At 11:30 am: Jalil Fadili (ENSI, Caen), "An exploration of inverse problems in image processing through sparse representations"

Tuesday, February 19, 2008

At 10:15 am: Yves Meyer (ENS, Cachan), "Irregular sampling and 'compressed sensing'"
