Since the last Nuit Blanche in Review (June 2015), we saw Pluto up close while, at the same time, COLT and ICML took place in France. As a result, quite a few implementations were made available by their respective authors. Enjoy!

Implementations:

- Forward-Backward Greedy Algorithms for Atomic Norm Regularization - implementation -

- Random Mappings Designed for Commercial Search Engines - implementation -

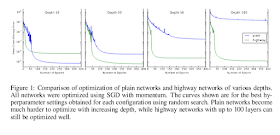

- Training Very Deep Networks - implementation -

- #ICML2015 code: MADE: Masked Autoencoder for Distribution Estimation - implementation -

- #ICML2015 codes: Generative Moment Matching Networks - implementation -

- #ICML2015 codes: Vector-Space Markov Random Fields via Exponential Families - implementation -

- #ICML2015 codes: DeepVis, Deep Visualization Toolbox: Understanding Neural Networks Through Deep Visualization

- #ICML2015 codes: Probabilistic Backpropagation for Scalable Learning of Bayesian Neural Networks - implementation -

- Manopt 2.0: A Matlab toolbox for optimization on manifolds - implementation -

- Compressing Neural Networks with the Hashing Trick - implementation -

In-depth:

- A Perspective on Future Research Directions in Information Theory

- Dimensionality Reduction for k-Means Clustering and Low Rank Approximation

- Compressive Sensing for #IoT: Photoplethysmography-Based Heart Rate Monitoring in Physical Activities via Joint Sparse Spectrum Reconstruction, TROIKA

- A Nearly-Linear Time Framework for Graph-Structured Sparsity

- Near-Optimal Estimation of Simultaneously Sparse and Low-Rank Matrices from Nested Linear Measurements

- Compressed Sensing of Multi-Channel EEG Signals: The Simultaneous Cosparsity and Low Rank Optimization

- The Loss Surfaces of Multilayer Networks, Global Optimality in Tensor Factorization, Deep Learning, and Beyond, Generalization Bounds for Neural Networks through Tensor Factorization

- Optimal approximate matrix product in terms of stable rank

- Randomized sketches for kernels: Fast and optimal non-parametric regression

- Random forests and kernel methods

- Intersecting Faces: Non-negative Matrix Factorization With New Guarantees

Job:

Conferences:

Slides / Slides and Videos / Videos:

Image credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute

- Saturday Morning Video: Reverse Engineering the Human Visual System

- Slides and Video: What's Wrong with Deep Learning?

- Video: Compressive Hyperspectral Imaging via Approximate Message Passing

- Video: Size- and level-adaptive Markov chain Monte Carlo

- Slides: #ICML2015 Tutorials: Structured Prediction, Bayesian Time Series Modeling, Natural Language Understanding, Policy Search, Modern Convex Optimization

- Slides #ICML2015: Léon Bottou – Two high stakes challenges in machine learning

- Saturday Morning Videos: Information Theory in Complexity Theory and Combinatorics (Simons Institute @ Berkeley)