Someone liked last week's "This Week in Compressive Sensing" so much that it got posted on HackerNews, sending a trove of newcomers to the subject. The traffic was impressive: at one point, readers on five continents were watching this entry at the same time. Wow.

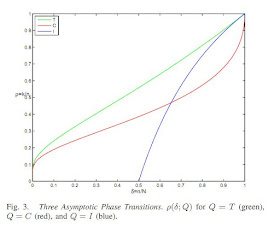

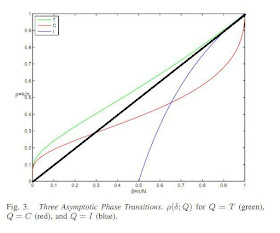

This week started with the aftermath of last Friday's stunning finding. To make it clear to some people, I wrote a small summary of what it meant. There is obviously more to say, and I will surely come back to it over time. One item stood out, though: the videos of the SPARS meeting sent by Jared Tanner. One of them (the first, I think) is a video of David Donoho, who is now advocating changing the units in the phase diagram (this is very much welcome). I also noted his fascinating remark that Belief Propagation acts as a sort of ensemble algorithm (checking several solutions at a time). This is the first time I have heard how the algorithm works explained so clearly.

This week, several papers related to compressive sensing appeared on arXiv. They are:

Liked this entry? Subscribe to the Nuit Blanche feed, there's more where that came from.

Robust Sparse Analysis Regularization by Samuel Vaiter, Gabriel Peyré, Charles Dossal, Jalal Fadili. The abstract reads:

This paper studies the properties of L1-analysis regularization for the resolution of linear inverse problems. Most previous works consider sparse synthesis priors where the sparsity is measured as the L1 norm of the coefficients that synthesize the signal in a given dictionary. In contrast, the more general analysis regularization minimizes the L1 norm of the correlations between the signal and the atoms in the dictionary. The corresponding variational problem includes several well-known regularizations such as the discrete total variation and the fused lasso. We first prove that a solution of analysis regularization is a piecewise affine function of the observations. Similarly, it is a piecewise affine function of the regularization parameter. This allows us to compute the degrees of freedom associated to sparse analysis estimators. Another contribution gives a sufficient condition to ensure that a signal is the unique solution of the analysis regularization when there is no noise in the observations. The same criterion ensures the robustness of the sparse analysis solution to a small noise in the observations. Our last contribution defines a stronger sufficient condition that ensures robustness to an arbitrary bounded noise. In the special case of synthesis regularization, our contributions recover already known results, that are hence generalized to the analysis setting. We illustrate these theoretical results on practical examples to study the robustness of the total variation and the fused lasso regularizations.
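To make the analysis prior concrete, here is a minimal sketch, assuming a 1-D signal and taking the analysis operator to be forward finite differences, so that the L1 norm of the analysis coefficients is the discrete total variation mentioned in the abstract. The function names, the toy signals and the denoising objective (identity forward operator) are illustrative assumptions, not taken from the paper.

```python
def finite_differences(signal):
    """Analysis coefficients: correlations of the signal with difference atoms."""
    return [b - a for a, b in zip(signal, signal[1:])]


def analysis_l1(signal):
    """L1 norm of the analysis coefficients, i.e. the discrete total variation."""
    return sum(abs(c) for c in finite_differences(signal))


def objective(candidate, observations, lam):
    """0.5 * ||y - x||^2 + lam * ||finite differences of x||_1 (toy denoising setup)."""
    fidelity = 0.5 * sum((y - x) ** 2 for x, y in zip(candidate, observations))
    return fidelity + lam * analysis_l1(candidate)


# Two hypothetical signals with the same range of values.
piecewise_constant = [0.0, 0.0, 0.0, 2.0, 2.0, 2.0]
oscillating = [0.0, 2.0, 0.0, 2.0, 0.0, 2.0]

print(analysis_l1(piecewise_constant))  # one jump of height 2
print(analysis_l1(oscillating))         # five jumps of height 2
```

The piecewise constant signal is sparse under this analysis operator (a single nonzero difference), which is the kind of structure the analysis prior favors; a synthesis prior would instead count the coefficients used to build the signal from dictionary atoms.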

On Variable Density Compressive Sampling by Gilles Puy, Pierre Vandergheynst, Yves Wiaux. The abstract reads:

We advocate an optimization procedure for variable density sampling in the context of compressed sensing. In this perspective, we introduce a minimization problem for the coherence between the sparsity and sensing bases, whose solution provides an optimized sampling profile. This minimization problem is solved with the use of convex optimization algorithms. We also propose a refinement of our technique when prior information is available on the signal support in the sparsity basis. The effectiveness of the method is confirmed by numerical experiments. Our results also provide a theoretical underpinning to state-of-the-art variable density Fourier sampling procedures used in magnetic resonance imaging.

The Statistical Coherence-based Theory of Robust Recovery of Sparsest Overcomplete Representation by Lianlin Li. The abstract reads:

The recovery of the sparsest overcomplete representation has recently attracted intensive research activity owing to its important potential in many applied fields such as signal processing, medical imaging, communication, and so on. The problem can be stated as follows: seek the sparse coefficient vector $x$ of a given noisy observation $y$ over a redundant dictionary $D$ such that $y = Dx + e$, where $e$ is the corrupting error. Elad et al. established a worst-case result, which gives a condition for stable recovery of the sparsest overcomplete representation over $D$ in terms of the coherence of $D$. Although this condition is easy to evaluate for any given matrix, it cannot provide a realistic guide in many cases. On the other hand, most of the popular analyses of sparse reconstruction rely heavily on the so-called RIP (Restricted Isometry Property) for matrices developed by Candes et al., which is usually very difficult or impossible to verify for a given measurement matrix. In this article, we introduce a simple and efficient way of determining the ability of a given $D$ to recover the sparse signal, based on the statistical analysis of the coherence coefficients between any two different columns of the given measurement matrix. The key mechanism behind the proposed paradigm is the analysis of the statistical distribution (the mean and covariance) of these coefficients. We prove that if the mean of these coefficients is zero and their covariance is as small as possible, one can faithfully recover approximately sparse signals from a minimal number of noisy measurements with overwhelming probability. The resulting theory is not only suitable for almost all models (e.g. Gaussian and frequency measurements) discussed in the literature of compressed sampling, but also provides a framework for new measurement strategies.
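As a rough illustration of the quantities this abstract talks about, the sketch below computes the coherence coefficients of a matrix (inner products between distinct unit-norm columns) and summarizes them by their mean, variance and largest absolute value. It is only a sketch under assumptions: the Gaussian test matrix, its 64 x 128 size, the seed and the function names are all hypothetical, and the plain variance stands in for the "covariance" of the abstract. It does not reproduce the paper's recovery criterion.

```python
import math
import random


def unit_columns(matrix):
    """Return the columns of `matrix` (a list of rows), each scaled to unit norm."""
    unit = []
    for col in zip(*matrix):
        norm = math.sqrt(sum(v * v for v in col))
        unit.append([v / norm for v in col])
    return unit


def coherence_coefficients(matrix):
    """Inner products between every pair of distinct normalized columns."""
    cols = unit_columns(matrix)
    return [
        sum(a * b for a, b in zip(cols[i], cols[j]))
        for i in range(len(cols))
        for j in range(i + 1, len(cols))
    ]


def coherence_statistics(matrix):
    """Mean, variance and maximum absolute value of the coherence coefficients."""
    coeffs = coherence_coefficients(matrix)
    mean = sum(coeffs) / len(coeffs)
    variance = sum((c - mean) ** 2 for c in coeffs) / len(coeffs)
    largest = max(abs(c) for c in coeffs)
    return mean, variance, largest


rng = random.Random(0)          # fixed seed so the run is repeatable
n_rows, n_cols = 64, 128        # hypothetical dictionary size
D = [[rng.gauss(0.0, 1.0) for _ in range(n_cols)] for _ in range(n_rows)]

mean, variance, largest = coherence_statistics(D)
print(f"mean={mean:.4f}  variance={variance:.4f}  largest |coefficient|={largest:.4f}")
```

A worst-case condition only looks at the largest coefficient, whereas the statistical view described in the abstract looks at how the whole set of coefficients is distributed.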

Distributed sensor failure detection in sensor networks by Tamara Tosic, Nikolaos Thomos, Pascal Frossard. The abstract reads:

We investigate distributed sensors' failure detection in networks with a small number of defective sensors. We assume that sensors measure a smooth physical phenomenon and that defective sensors' measurements significantly differ from neighboring sensor measurements. We consider that the defective sensors are well represented by binary sparse signals. We build on the sparse nature of the binary sensor failure signals and propose a new detection algorithm based on Group Testing (GT). The distributed GT algorithm estimates the set of defective sensors from a small number of linearly independent binary messages with a simple distance decoder. Furthermore, we theoretically determine the lower bound of the minimal number of linearly independent messages needed for detection guarantees in case of a single defective sensor. We show through experimentation that the number of messages required for successful detection is in practice much smaller for small and medium sized networks. We extend our framework to the detection of multiple failures by appropriately modifying the message exchange protocol and the decoding procedure. The employed decoder is of low complexity and is robust to noisy messages. The overall method is furthermore resilient to the network dynamics because of our gossip-based message dissemination protocol. We provide results for both regular and irregular network topologies. Given a network setup, we provide parameter selection rules that improve the detection accuracy. Simulations demonstrate that in terms of detection performance the proposed method outperforms methods based on random walk measurement collection. Our method performs detection in fewer system rounds, but it requires larger communication overhead compared to random walk based algorithms that collect network measurements.
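For readers new to group testing, here is a minimal centralized sketch of the single-defective case with a distance decoder. It is a toy under stated assumptions, not the authors' distributed gossip-based protocol: the test matrix (column j is the binary expansion of j + 1), the noiseless outcomes and all function names are choices made for this illustration.

```python
def build_test_matrix(num_sensors):
    """Binary test matrix whose column j is the binary expansion of j + 1.

    Every column is nonzero and distinct, so one defective sensor is identifiable.
    """
    num_tests = num_sensors.bit_length()
    return [[((j + 1) >> t) & 1 for j in range(num_sensors)] for t in range(num_tests)]


def run_tests(matrix, defective):
    """A test outcome is 1 if at least one defective sensor takes part in the test."""
    return [int(any(row[j] for j in defective)) for row in matrix]


def distance_decode(matrix, outcomes):
    """Return the sensor whose column is closest to the outcomes in Hamming distance."""
    def hamming(j):
        return sum(row[j] != o for row, o in zip(matrix, outcomes))

    return min(range(len(matrix[0])), key=hamming)


num_sensors = 20                      # hypothetical network size
W = build_test_matrix(num_sensors)
outcomes = run_tests(W, defective={13})
print(len(W), distance_decode(W, outcomes))  # 5 tests, sensor 13 recovered
```

With 20 sensors, 5 pooled tests are enough here instead of 20 individual checks, which is the kind of saving sparsity buys. This particular matrix is not robust to noisy outcomes, since some columns differ in a single entry; a decoder that tolerates noise needs columns that are further apart.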

Reduced-Dimension Multiuser Detection by Yao Xie, Yonina C. Eldar, Andrea Goldsmith. The abstract reads:

We explore several reduced-dimension multiuser detection (RD-MUD) structures that significantly decrease the number of required correlation branches at the receiver front-end, while still achieving performance similar to that of the conventional matched-filter (MF) bank. RD-MUD exploits the fact that the number of active users is typically small relative to the total number of users in the system and relies on ideas of analog compressed sensing to reduce the number of correlators. We first develop a general framework for both linear and nonlinear RD-MUD detectors. We then present theoretical performance analysis for two specific detectors: the linear reduced-dimension decorrelating (RDD) detector, which combines subspace projection and thresholding to determine active users and sign detection for data recovery, and the nonlinear reduced-dimension decision-feedback (RDDF) detector, which combines decision-feedback orthogonal matching pursuit for active user detection and sign detection for data recovery. The theoretical performance results for both detectors are validated via numerical simulations.

The Statistical Inefficiency of Sparse Coding for Images (or, One Gabor to Rule them All) by James Bergstra, Aaron Courville, Yoshua Bengio. The abstract reads:

Sparse coding is a proven principle for learning compact representations of images. However, sparse coding by itself often leads to very redundant dictionaries. With images, this often takes the form of similar edge detectors which are replicated many times at various positions, scales and orientations. An immediate consequence of this observation is that the estimation of the dictionary components is not statistically efficient. We propose a factored model in which factors of variation (e.g. position, scale and orientation) are untangled from the underlying Gabor-like filters. There is so much redundancy in sparse codes for natural images that our model requires only a single dictionary element (a Gabor-like edge detector) to outperform standard sparse coding. Our model scales naturally to arbitrary-sized images while achieving much greater statistical efficiency during learning. We validate this claim with a number of experiments showing, in part, superior compression of out-of-sample data using a sparse coding dictionary learned with only a single image.